How to use a custom robots.txt file to make your crawl more efficient

As you know, you can use a custom robots.txt file when configuring a crawl in JetOctopus. This is useful if, for example, you want to scan a staging website. But a custom robots.txt file has other advantages too. In this article, we explain how to make the most of this option to get more insights from your crawl results.

What is a robots.txt file?

The robots.txt file is a plain-text file located at the root of your website. It contains directives for robots: search engine crawlers, advertising bots, marketing tools, and so on. With robots.txt, you can limit crawling, that is, restrict bots' access to specific pages of your website. In other words, robots.txt is a tool for managing your crawl budget.

Meta robots tags and the X-Robots-Tag HTTP header let you limit the indexing of pages, while Disallow rules in the robots.txt file prevent search engines from visiting (crawling) certain pages.

When crawling your website, JetOctopus also follows robots.txt rules, just like Googlebot. Before scanning, the JetOctopus crawler requests your robots.txt file (for example, https://example.com/robots.txt) and remembers all the directives. For example, if the file contains:

```
User-agent: *
Disallow: /category/
```

then JetOctopus will not visit URLs whose path begins with /category/ and will not be able to crawl them.
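To illustrate how such a rule is matched, here is a minimal Python sketch. It uses the standard-library `urllib.robotparser` module as a stand-in for a crawler's parser (it is not JetOctopus's implementation), parses the rules from memory instead of fetching them, and checks a few hypothetical URLs with an arbitrary user-agent name, `AnyBot`. Keep in mind that this module only does prefix matching and does not understand Google's `*` and `$` wildcards.

```python
from urllib.robotparser import RobotFileParser

# The rules from the example above, held in memory (no network request).
ROBOTS_TXT = """\
User-agent: *
Disallow: /category/
"""

def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content supplied as a string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

parser = build_parser(ROBOTS_TXT)

# Hypothetical URLs; "AnyBot" falls under the "User-agent: *" group.
for url in (
    "https://example.com/category/shoes/",
    "https://example.com/blog/category/",
    "https://example.com/",
):
    verdict = "allowed" if parser.can_fetch("AnyBot", url) else "blocked"
    print(f"{verdict}: {url}")
```

The second URL stays crawlable because a Disallow value is matched against the beginning of the URL path, not anywhere inside it.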

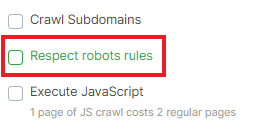

If you clear the “Respect robots rules” checkbox, the JetOctopus crawler will scan all pages regardless of robots.txt rules.

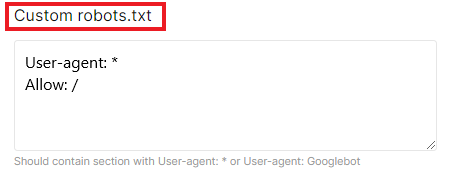

You can also add a custom robots.txt file to optimize the JetOctopus crawl. To do this, go to the advanced settings and add your file to the corresponding field.

Please note that a custom robots.txt file is used only for the current crawl. The file is not stored anywhere and does not change the robots.txt file on your website.
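For example, a staging site often serves a robots.txt that blocks every bot from every page, so a crawl that respects it finds nothing to scan. The minimal Python sketch below compares such a file with a permissive custom one, using the standard-library `urllib.robotparser` module as a stand-in parser; both files, the staging URL, and the `AnyBot` user-agent name are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical staging robots.txt: every bot is blocked from the whole site.
STAGING_ROBOTS = "User-agent: *\nDisallow: /\n"

# Hypothetical custom robots.txt for the crawl: an empty Disallow value allows everything.
CUSTOM_ROBOTS = "User-agent: *\nDisallow:\n"

def can_crawl(robots_txt: str, url: str, agent: str = "AnyBot") -> bool:
    """Return True if `agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)

url = "https://staging.example.com/products/"
print("staging file:", can_crawl(STAGING_ROBOTS, url))
print("custom file: ", can_crawl(CUSTOM_ROBOTS, url))
```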

What can you use a custom robots.txt file for?

You can use a custom robots.txt file to:

- crawl the staging website;

- crawl URLs that your live robots.txt blocks for search robots;

- check how your website's crawlability for search engines would change if you allowed or disallowed certain types of URLs, that is, which new pages would become crawlable and which would not;

- scan only the part of the website you need.
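To check how a change to robots.txt affects crawlability, you can evaluate the current and the proposed file against the same list of URLs. The function below is a hypothetical helper sketched in Python with the standard-library `urllib.robotparser` as a stand-in parser; the files and URLs are made up, and the proposed file also shows how to limit a crawl to one section (`/blog/`).

```python
from urllib.robotparser import RobotFileParser

def _parser(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text held in memory."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

def crawlability_diff(current: str, proposed: str, urls: list[str],
                      agent: str = "Googlebot") -> tuple[list[str], list[str]]:
    """Return (newly_blocked, newly_allowed) URLs when `proposed` replaces `current`."""
    old, new = _parser(current), _parser(proposed)
    newly_blocked = [u for u in urls if old.can_fetch(agent, u) and not new.can_fetch(agent, u)]
    newly_allowed = [u for u in urls if not old.can_fetch(agent, u) and new.can_fetch(agent, u)]
    return newly_blocked, newly_allowed

# Hypothetical files: the proposal crawls only /blog/ (including /blog/tag/).
CURRENT = "User-agent: *\nDisallow: /blog/tag/\n"
PROPOSED = "User-agent: *\nAllow: /blog/\nDisallow: /\n"

URLS = [
    "https://example.com/blog/post-1/",
    "https://example.com/blog/tag/seo/",
    "https://example.com/products/",
]

blocked, allowed = crawlability_diff(CURRENT, PROPOSED, URLS)
print("newly blocked:", blocked)
print("newly allowed:", allowed)
```

Because `urllib.robotparser` applies the first matching rule in file order, `Allow: /blog/` is placed before `Disallow: /`. Google instead picks the longest matching rule, which gives the same result for this particular file.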

By combining different robots.txt directives, you can manage your crawl budget more efficiently and reduce the load that bots put on your web server.

Search engines or marketing bots often crawl a website too aggressively, and some of those search engines may not be your target at all. For example, you optimize your site for Google, yet Baidu's crawler scans it constantly even though you get no clicks or impressions from Baidu. In that case, you can block certain types of pages for the Baidu crawler to reduce the load on your web server.

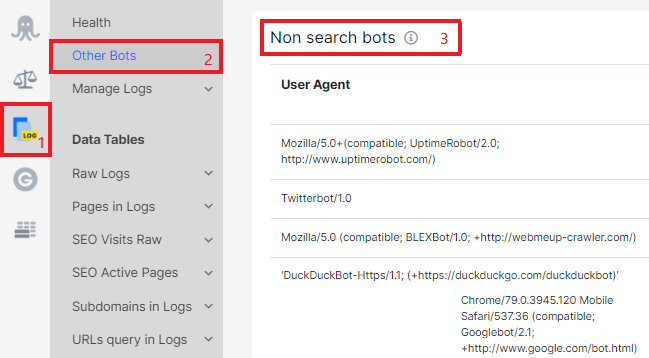
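A robots.txt group aimed only at Baidu's crawler, which identifies itself as `Baiduspider`, might look like the hypothetical file in this sketch; `/search/` and `/filter/` merely stand in for whatever low-value page types you decide to block. The check uses Python's standard-library `urllib.robotparser` as a stand-in parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: Baiduspider loses access to two page types,
# while every other bot (including Googlebot) keeps full access.
ROBOTS_TXT = """\
User-agent: Baiduspider
Disallow: /search/
Disallow: /filter/

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/search/?q=shoes"
for agent in ("Baiduspider", "Googlebot"):
    print(agent, "->", "allowed" if parser.can_fetch(agent, url) else "blocked")
```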

The same applies to marketing bots: if they crawl your website too aggressively and create a heavy load, you can block specific marketing tools from crawling the site. To find the most active marketing bots, go to the “Logs” – “Other bots” section.
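Blocking a marketing tool from the whole site takes a group with `Disallow: /`. In this minimal sketch, `AhrefsBot` and `SemrushBot` are only examples of marketing-tool user agents (substitute the bots you actually see in your logs), and the check again relies on Python's `urllib.robotparser` as a stand-in parser. Keep in mind that robots.txt is advisory: it reduces load only for bots that choose to obey it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: two example marketing crawlers share one group
# that blocks the entire site; all other bots are unaffected.
ROBOTS_TXT = """\
User-agent: AhrefsBot
User-agent: SemrushBot
Disallow: /

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("AhrefsBot", "SemrushBot", "Googlebot"):
    allowed = parser.can_fetch(agent, "https://example.com/")
    print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```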

You can also check which pages Google will still be able to crawl after you add a “Disallow” rule to the robots.txt file. Consider URLs that have only one inlink. If you disallow the pages that contain those single links, search robots will no longer be able to reach these URLs through internal links. As a result, even though the URLs themselves are not blocked, they are likely to be crawled less often.
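To estimate which URLs a new Disallow rule would cut off, you can combine link data with the proposed rules. The function below is a hypothetical helper, not a JetOctopus feature: it takes a link graph (each source page mapped to the URLs it links to), counts distinct linking pages per URL, and returns the URLs that are not blocked themselves but whose single inlink sits on a disallowed page. It uses Python's `urllib.robotparser` as a stand-in parser, and the link graph and rules are made up.

```python
from collections import defaultdict
from urllib.robotparser import RobotFileParser

def unreachable_single_inlink_urls(links: dict[str, list[str]], robots_txt: str,
                                   agent: str = "Googlebot") -> list[str]:
    """Find allowed URLs whose only inlink comes from a page disallowed for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    # Collect the distinct pages linking to each target URL.
    inlinks: dict[str, set[str]] = defaultdict(set)
    for source, targets in links.items():
        for target in targets:
            inlinks[target].add(source)

    result = []
    for target, sources in inlinks.items():
        if len(sources) == 1:
            (only_source,) = sources
            # The URL itself is crawlable, but its only discovery path is blocked.
            if not parser.can_fetch(agent, only_source) and parser.can_fetch(agent, target):
                result.append(target)
    return sorted(result)

# Hypothetical link graph from a crawl.
LINKS = {
    "https://example.com/": ["https://example.com/archive/", "https://example.com/blog/"],
    "https://example.com/archive/": ["https://example.com/blog/old-post/"],
    "https://example.com/blog/": ["https://example.com/blog/new-post/"],
}

PROPOSED = "User-agent: *\nDisallow: /archive/\n"
print(unreachable_single_inlink_urls(LINKS, PROPOSED))
```

Here `/blog/old-post/` is reported: it is not disallowed, but its only inlink is on `/archive/`, which the proposed rule blocks.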

When setting up custom robots.txt, pay attention to the following points:

- JetOctopus supports the same syntax as Google (/, *, etc.);

- on your live website, each subdomain needs its own robots.txt file, but when you scan subdomains with JetOctopus, the custom robots.txt is applied to all of them;

- a custom robots.txt does not affect your website and does not change your live robots.txt file.
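Google documents that in robots.txt paths `*` matches any sequence of characters, `$` marks the end of the URL, and when several rules match, the most specific (longest) rule wins, with Allow winning a tie. Below is a simplified Python sketch of those documented rules for a single user-agent group. It is not JetOctopus's implementation, it skips URL normalization such as percent-encoding, and the rules and paths are hypothetical. (Python's `urllib.robotparser` does not implement these wildcards.)

```python
import re

def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex anchored at the path start.

    '*' matches any sequence of characters; a trailing '$' anchors the end.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))

def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Decide access for `path` (path plus query) from (directive, pattern) pairs.

    The longest matching pattern wins; on a tie, Allow wins; no match means allowed.
    """
    best_len, allowed = -1, True
    for directive, pattern in rules:
        if not pattern:  # an empty value matches nothing
            continue
        if pattern_to_regex(pattern).match(path):  # match() anchors at the start
            is_allow = directive.lower() == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed

# Hypothetical rules for one user-agent group.
RULES = [
    ("Disallow", "/*.pdf$"),
    ("Disallow", "/shop/"),
    ("Allow", "/shop/sale/"),
]

for path in ("/docs/guide.pdf", "/docs/guide.pdf?v=2", "/shop/cart", "/shop/sale/shoes"):
    print(path, "->", "allowed" if is_allowed(RULES, path) else "blocked")
```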
