Log Analysis for an SEO Boost

Logs are unique data: they record, with 100% accuracy, how Googlebot actually crawls your website. Deep log analysis can help you boost indexability and rankings, attract valuable traffic, and improve conversions and sales. Although Google's search algorithms change constantly, log file analysis helps you see long-term trends.


In this article we will answer the following questions:

- What are logs and log analysis?

- How can the crawling budget be optimized with the help of log analysis?

- How can a website’s indexability be improved with the help of logs?

- Why is it important to analyze logs during a website migration?

Logs & log analysis

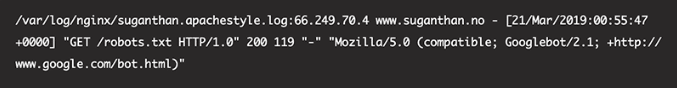

A log (log file) is a text file in which the web server records every request it receives, including visits from Google's search bots. For each visitor, the file typically stores the IP address, the time of the request, the requested URL, the HTTP status code and the user agent, and Googlebot is no exception. Log analysis focuses on the Googlebot entries: you can see which type of bot is hitting your pages, which folders and pages it requests most, etc.
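To make this concrete, here is a minimal Python sketch that picks the Googlebot entries out of a log and counts its top pages and top folders. It assumes your server writes the common Apache/Nginx "combined" log format; `googlebot_hits` and `top_pages_and_folders` are hypothetical helper names, not part of any particular tool.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format. This is an assumption: check your
# server configuration, since other formats need a different pattern.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) \S+" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Yield parsed entries whose user agent claims to be Googlebot.

    The user-agent string can be spoofed; Google recommends confirming real
    Googlebot traffic with a reverse DNS lookup, which this sketch skips.
    """
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and "Googlebot" in match.group("agent"):
            yield match.groupdict()


def top_pages_and_folders(lines, n=10):
    """Return the n most requested pages and top-level folders for Googlebot."""
    pages, folders = Counter(), Counter()
    for hit in googlebot_hits(lines):
        path = hit["path"].split("?", 1)[0]  # ignore query strings
        if not path.startswith("/"):
            continue  # skip malformed or proxy-style request targets
        pages[path] += 1
        parent = path.rsplit("/", 1)[0]  # "/blog/2019/post" -> "/blog/2019"
        top = parent.split("/")[1] if parent else ""  # -> "blog"
        folders[f"/{top}/" if top else "/"] += 1
    return pages.most_common(n), folders.most_common(n)
```

You could run it on a whole file with `with open("access.log") as f: print(top_pages_and_folders(f))`, where `access.log` is just an example file name.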

Log analysis involves processing archived log files covering at least a month of website operation, and ideally half a year, because it takes time to get a complete picture of search bot behavior. Logs show you how often Googlebot visits your site, which web pages it crawls (a prerequisite for indexing) and which it ignores. At first glance, this data may seem unimportant, but if you analyze it over at least a few months, you can assess trends in your business comprehensively. Logs are really juicy data you should use for on-page optimization.
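A simple way to see such trends is to count Googlebot requests per month. The sketch below assumes you have already filtered the log down to Googlebot entries and extracted their timestamps in the combined-log time format (for example, "10/Jan/2019:10:00:00 +0000"); `monthly_googlebot_hits` and `month_over_month_change` are hypothetical helper names.

```python
from collections import Counter
from datetime import datetime


def monthly_googlebot_hits(timestamps):
    """Count Googlebot requests per calendar month, sorted by month."""
    per_month = Counter()
    for ts in timestamps:
        moment = datetime.strptime(ts, "%d/%b/%Y:%H:%M:%S %z")
        per_month[moment.strftime("%Y-%m")] += 1
    return dict(sorted(per_month.items()))


def month_over_month_change(per_month):
    """Percentage change in Googlebot hits between consecutive months."""
    months = list(per_month)
    return {
        cur: round(100 * (per_month[cur] - per_month[prev]) / per_month[prev], 1)
        for prev, cur in zip(months, months[1:])
    }
```

A steady decline in monthly hits over several months is the kind of long-term signal that a single week of logs would not reveal.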

Log analysis for crawling budget optimization

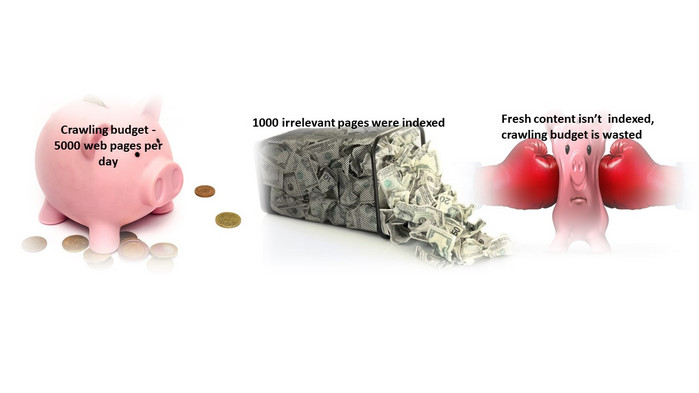

Today there are many definitions of ‘crawling budget’, and it can be hard to find the right one. The Google Webmaster Central Blog published the article “What Crawl Budget Means for Googlebot”, in which the author clarifies this term. In simple words, the crawling budget is the limited resource Googlebot is ready to spend on each website. In practice, Googlebot can waste this budget on irrelevant web pages. For instance, suppose you have a crawling budget of 5,000 web pages per day.

Ideally, all 5,000 crawled pages would be pages you want to appear in organic search results, but suppose Googlebot spends 1,000 of those crawls on irrelevant URLs instead. When the crawling budget is wasted on useless URLs, relevant content may not get crawled and indexed. Log analysis shows you where your crawling budget is actually being spent.
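The arithmetic is simple: 1,000 wasted crawls out of 5,000 is 20% of the daily budget. The sketch below estimates this share from the paths Googlebot requested. What counts as "irrelevant" depends on your site, so the rules in `IRRELEVANT_PREFIXES` (internal search, cart and tag pages) and the choice to treat every parameterized URL as waste are assumptions for illustration; the helper names are hypothetical too.

```python
from urllib.parse import urlsplit

# Hypothetical rules for "irrelevant" URLs; adapt them to your own site.
IRRELEVANT_PREFIXES = ("/search", "/cart", "/tag/")


def is_irrelevant(path):
    """Treat parameterized URLs and utility sections as wasted crawls."""
    parts = urlsplit(path)
    return bool(parts.query) or parts.path.startswith(IRRELEVANT_PREFIXES)


def wasted_budget_share(crawled_paths):
    """Return (wasted hits, total hits, wasted share in percent)."""
    total = len(crawled_paths)
    wasted = sum(1 for p in crawled_paths if is_irrelevant(p))
    share = 100 * wasted / total if total else 0.0
    return wasted, total, share
```

With the numbers from the example above, `wasted_budget_share(["/product/1"] * 4000 + ["/search?q=shoes"] * 1000)` reports 1,000 wasted hits out of 5,000, or 20%.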

Analyze logs to improve website indexability

Let’s define the term ‘indexing’. In the SEO world, it is the process of adding web pages to Google’s search database (the index). After the search bot crawls a website and adds its pages to the index, Google can rank them in organic search results. In Google Search Console (GSC) you can find valuable crawl stats:

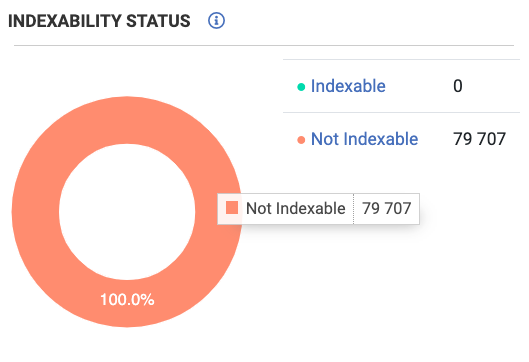

However, GSC won’t show you how the search bot crawls your site step by step. If you want to know for sure which pages Googlebot has crawled (and can therefore index) and which it has not, try log analysis. Without indexing, your website has no chance of ranking.
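One way to answer this from logs is to compare the pages you want indexed with the pages Googlebot actually requested. In this sketch the list of wanted pages is assumed to come from your XML sitemap, and `crawl_coverage` is a hypothetical helper. It also lists URLs Googlebot requested that are not on your list, which often point to old or duplicate pages eating the budget.

```python
def crawl_coverage(site_paths, googlebot_paths):
    """Compare the pages you want indexed with the pages Googlebot requested.

    site_paths: pages you care about (e.g. from your XML sitemap).
    googlebot_paths: paths taken from Googlebot entries in your logs.
    Returns (never crawled, crawled but not listed, coverage in percent).
    """
    wanted, crawled = set(site_paths), set(googlebot_paths)
    never_crawled = sorted(wanted - crawled)
    outside_list = sorted(crawled - wanted)
    coverage = 100 * len(wanted & crawled) / len(wanted) if wanted else 0.0
    return never_crawled, outside_list, coverage
```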

Indexability can vary for different types of websites, but if more than 80% of the pages Googlebot crawls are not indexable, you should investigate, because crawling budget spent on non-indexable pages is wasted.
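Here is a minimal sketch of that check. It assumes you know the last status code Googlebot received for each crawled URL (from your logs) and which pages carry a noindex directive (for example, from a crawler export). Treating only 200 responses without noindex as indexable is a simplification, since canonical tags, robots.txt rules and other signals also matter; the helper names are hypothetical.

```python
NON_INDEXABLE_ALERT = 0.80  # the 80% threshold mentioned in the text


def non_indexable_share(crawled, noindex_paths=frozenset()):
    """Share of distinct crawled URLs that cannot be indexed.

    crawled: mapping path -> last HTTP status Googlebot received.
    noindex_paths: pages carrying a noindex directive.
    """
    if not crawled:
        return 0.0
    non_indexable = [
        path for path, status in crawled.items()
        if status != 200 or path in noindex_paths
    ]
    return len(non_indexable) / len(crawled)


def needs_review(share):
    """True when the non-indexable share exceeds the alert threshold."""
    return share > NON_INDEXABLE_ALERT
```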

Log analysis helps reduce the negative impact of a website migration

If your site migrates to a new CMS, log file analysis is crucial for avoiding SEO drops. Unfortunately, a website migration almost never goes without epic bugs. After the migration, log files help you find and fix errors quickly, before Google indexes them.
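After the switch, a quick health check is to group Googlebot's responses by status class and list the URLs that return errors, as well as old URLs that are not permanently redirected. The sketch assumes you have (path, status) pairs from post-migration Googlebot entries and a list of pre-migration URLs; `migration_report` is a hypothetical helper, and expecting old URLs to return 301 or 308 reflects a typical redirect plan rather than a universal rule.

```python
from collections import Counter


def migration_report(hits, old_paths=frozenset()):
    """Summarize Googlebot responses after a migration.

    hits: (path, status) pairs from post-migration Googlebot log entries.
    old_paths: URLs of the pre-migration site, expected to answer with a
    permanent redirect (301 or 308).
    """
    by_class = Counter(f"{status // 100}xx" for _, status in hits)
    errors = sorted({path for path, status in hits if status >= 400})
    unredirected = sorted(
        {path for path, status in hits
         if path in old_paths and status not in (301, 308)}
    )
    return by_class, errors, unredirected
```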

Of course, a log analyzer is a crucial helper for every webmaster, but you will achieve a real SEO boost if you combine log analysis with crawling. A web crawler is a tool that behaves like a search bot: it goes through your site and finds the technical bugs that must be fixed for a successful migration. In other words, log analysis shows which pages aren’t being crawled and indexed, and crawling shows you why. So you fix bugs based on the crawl data and then check in the logs how your improvements work.
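The last step of that loop, checking fixes in the logs, could look like the sketch below. It assumes you record the date each crawler-reported bug was fixed and have (path, status, day) tuples for Googlebot requests; `verify_fixes` is a hypothetical helper, and the example data are made up. For simplicity, only a 200 response counts as success.

```python
from datetime import date


def verify_fixes(fixed, hits):
    """Check in the logs whether Googlebot re-crawled fixed pages successfully.

    fixed: mapping path -> date the crawler-reported bug was fixed.
    hits: (path, status, day) tuples from Googlebot log entries, in
    chronological order.
    """
    result = {}
    for path, fixed_on in fixed.items():
        # Statuses Googlebot received for this page since the fix date.
        after = [status for p, status, day in hits if p == path and day >= fixed_on]
        if not after:
            result[path] = "not re-crawled yet"
        elif after[-1] == 200:
            result[path] = "ok"
        else:
            result[path] = "still failing"
    return result


# Example: three pages fixed on 1 March 2019 (made-up data).
FIX_DATE = date(2019, 3, 1)
EXAMPLE_FIXED = {"/a": FIX_DATE, "/b": FIX_DATE, "/c": FIX_DATE}
EXAMPLE_HITS = [
    ("/a", 404, date(2019, 2, 20)),
    ("/a", 200, date(2019, 3, 5)),
    ("/b", 500, date(2019, 3, 6)),
    ("/c", 404, date(2019, 2, 25)),
]
```

Here `/a` was re-crawled successfully, `/b` still fails, and Googlebot has not returned to `/c` since the fix.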

To sum up:

- Log analysis helps you ‘see’ your site the way Googlebot does

- Logs help optimize the crawling budget

- Logs help improve a website’s indexing

- Log analysis helps reduce the negative impact of a website migration

- Combining log analysis with crawling gives the full picture of your site’s problems

