How to migrate a site without an SEO drop. Case study

Issues such as broken links, HTTP error responses, and duplicate content often surface during a migration and can cause SEO drops that last for months. The good news is that it’s still possible to move your site with minimal loss of traffic. In this case study, we share a migration strategy that worked well for one of our clients’ websites, along with some useful tips on how to minimize risks during a site migration.

What’s inside:

- About the challenge

- Before the release

- After the release

- Results

- Bonus: SEO experts share their advice

About the challenge

Our client’s website is an e-commerce platform with a ten-year history and 300,000 indexed pages. Over that decade, successive teams of developers changed the website’s structure so many times that it accumulated numerous unnecessary orphaned pages, redirects, and “404 Page not found” errors. The website became a Pandora’s box, so to speak.

To keep these bugs from migrating to the new version of the website, we needed SEO analysis to find the technical issues and prioritize their resolution. I asked SEO experts on social media which technical parameters deserve special attention before a migration and included their most useful advice in this case study. After the launch, it was crucial to see how bots perceived the site on the new CMS so that we could eliminate any technical issues that might compromise the website’s reputation with Google.

Given the complexity of the challenge, a team of SEOs (together with JetOctopus tech geek Serge Bezborodov) developed a migration plan. Fortunately, it worked very well! If you’re planning to move a website, consider the approach used in this case so you can benefit from this positive experience.

A winning site migration strategy

Before the release

1. Clean up the mess in the structure

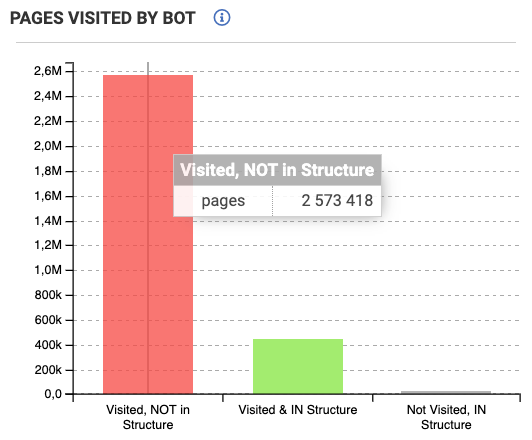

Before moving the content to a new CMS, it’s essential to get rid of useless data. To do this, the SEOs crawled the site to see its full structure and integrated historical log files to understand whether bots perceived the URLs as intended. The result was astonishing: there were 2.5M orphaned URLs:

What to do

Explore the content on orphaned pages and separate the useful URLs from the non-profitable ones. Then bring the useful URLs back into the structure by adding internal links to them from relevant indexable pages. Don’t forget to deal with useless content by closing it with noindex tags or robots.txt (depending on the volume of similar URLs). To avoid mistakes in directives, check out our guide on common robots.txt pitfalls.
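Before shipping new robots.txt rules, it can help to test them against a sample of URLs. Here is a minimal sketch using Python’s standard `urllib.robotparser`. The rules, paths, and the `blocked_urls` helper are made up for illustration and are not taken from the client’s site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules; the paths are invented for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Disallow: /old-catalog/
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical sample of URLs to test against the rules.
urls = [
    "https://example.com/search/shoes",
    "https://example.com/old-catalog/item-1",
    "https://example.com/catalog/item-1",
]
print(blocked_urls(ROBOTS_TXT, urls))
```

Running the same check against a list of pages that must stay crawlable is a quick way to catch a directive that blocks more than intended.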

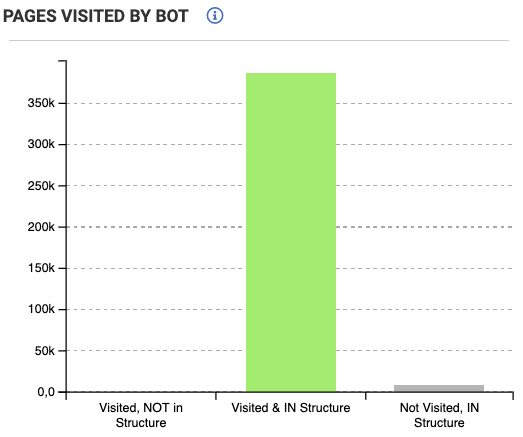

When developers followed these recommendations, the number of orphaned pages significantly decreased as shown below:

This shows that you might not know your website as well as you think: bots could be spending their resources on URLs that you don’t even know exist.
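The idea behind finding orphaned pages can be sketched in a few lines: compare the URLs that bots requested (from the logs) with the URLs a crawler reached by following links. This is a simplified illustration and not the exact process used on the client’s site. The `find_orphans` helper and the sample URLs are hypothetical.

```python
def find_orphans(crawled_urls, logged_urls):
    """Return URLs that bots requested (seen in logs) but the crawler never reached via links."""
    return set(logged_urls) - set(crawled_urls)


# Hypothetical data: URLs reached by the crawler vs. URLs requested by bots in the logs.
crawled = ["/", "/shoes", "/shoes/red-sneakers"]
logged = ["/", "/shoes", "/shoes/red-sneakers", "/promo-2012", "/tmp/test-page"]

print(sorted(find_orphans(crawled, logged)))
# prints ['/promo-2012', '/tmp/test-page']
```

In practice both lists need the same normalization (protocol, trailing slashes, parameters) before comparing, otherwise the difference will contain false positives.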

2. Optimize internal links

Before launching a new version of a site, it’s crucial to check whether pages are linked properly. When developers open access to a website, bots start crawling from a given page and discover new pages through links. For a more detailed explanation of the crawling and indexing process, I’ve provided a link to Google’s blog.

Based on our yearlong research of 300M crawled pages, we found that the more links a page has, the more often it is visited and crawled by a bot. On a big site, 1-10 links per page aren’t enough for the site to look authoritative in Google’s eyes.

On our client’s website, there were 55K pages with only 1 internal link and 50K pages with fewer than 10 links.
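If your crawler can export internal links as source/target pairs, a rough version of this count is easy to reproduce. The sketch below assumes the links being counted are inbound internal links, which is my reading of the numbers above. The `weakly_linked` helper, the threshold, and the sample data are hypothetical.

```python
from collections import Counter


def weakly_linked(pages, links, min_links=10):
    """Map each page with fewer than min_links inbound internal links to its link count."""
    # Count each (source, target) pair once and ignore links from a page to itself.
    inbound = Counter(target for source, target in set(links) if source != target)
    return {page: inbound[page] for page in pages if inbound[page] < min_links}


# Hypothetical crawl export: all known pages and (source, target) link pairs.
pages = ["/", "/a", "/b"]
links = [("/", "/a"), ("/a", "/b"), ("/", "/b"), ("/", "/a")]

print(weakly_linked(pages, links, min_links=2))
# prints {'/': 0, '/a': 1}
```

Sorting the result by link count gives a natural priority list of pages to interlink first.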

What NOT to do

Don’t insert an excessive number of random links across your website.

What to do

Go back to the plan of the site’s structure and decide which links from each section are best to add so that the structure stays relevant. If you’re not sure how to benefit from a visualized site structure, here is a good article that shows how to organize your website in a way that clearly communicates topic relevance to search engines.

When the site’s SEOs prioritized the list of URLs with poor interlinking and added relevant links to those pages, the number of bot visits increased. Some poorly linked URLs still remain, but these pages aren’t as important as the optimized ones and can wait until the high-priority SEO tasks are done.

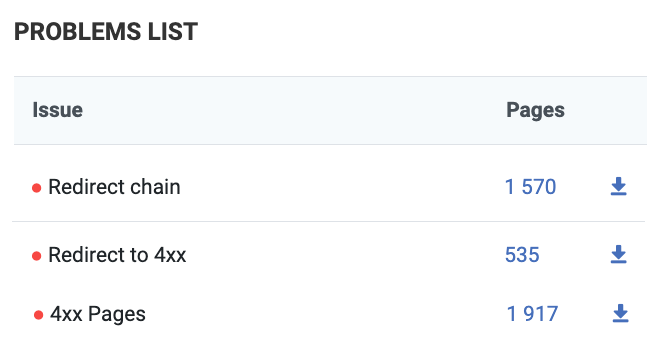

3. Keep calm and love redirects

Redirecting your pages correctly is one of the most crucial things that you can do to make site migration go smoothly. Broken redirects and chains are the most common issues with big e-commerce sites.

The developers, who reworked the structure of the website to make it more user-friendly, redirected the relevant pages from the old version of the website with permanent 301 status codes.
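A redirect map can be checked for chains and loops before it goes live. Here is a minimal sketch that works on a plain old-URL to new-URL mapping. The function names, the hop limit, and the sample paths are hypothetical and only illustrate the idea.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow url through the redirect map; return (final_url, hops, is_loop)."""
    seen = {url}
    hops = 0
    while url in redirects and hops < max_hops:
        url = redirects[url]
        hops += 1
        if url in seen:
            return url, hops, True
        seen.add(url)
    return url, hops, False


def audit_redirects(redirects):
    """Return chains (sources needing more than one hop) and sources caught in loops."""
    chains, loops = {}, []
    for source in redirects:
        final, hops, is_loop = resolve_redirect(source, redirects)
        if is_loop:
            loops.append(source)
        elif hops > 1:
            chains[source] = final
    return chains, loops


# Hypothetical redirect map: old URL -> new URL.
redirects = {
    "/old-shoes": "/shoes-2015",
    "/shoes-2015": "/shoes",
    "/a": "/b",
    "/b": "/a",
    "/old-bags": "/bags",
}

chains, loops = audit_redirects(redirects)
print(chains)  # prints {'/old-shoes': '/shoes'}
print(loops)   # prints ['/a', '/b']
```

Each entry in `chains` shows the final destination, so the fix is to point the old URL straight at it with a single 301.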

After the release

Yep! So far we’ve done a great deal of work but it’s too soon to celebrate victory. There are always some bugs that can appear unexpectedly after a site’s migration. But the earlier you find technical issues, the bigger the chances of avoiding an SEO drop.

1. Check whether everything is OK. Then come back and check again

Please remember that developers aren’t infallible robots. They are humans who make mistakes from time to time.

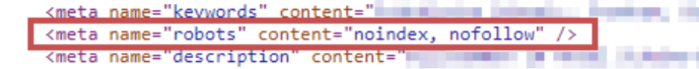

For instance, the developers working on our client’s site forgot to remove the “noindex” meta tag from a huge part of the site, as shown in the following image:

Although such mistakes are sometimes inevitable, SEOs can quickly spot showstoppers like this in the live stream of bot visits in the logs. This shows why a comprehensive audit of your server log files is crucial after launching the site.
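A leftover noindex tag is also easy to test for directly. The sketch below uses Python’s standard `html.parser` to look for a robots meta tag containing “noindex” in a page’s HTML. It is an illustration only: the class and function names are hypothetical, and it doesn’t cover directives sent in HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Sets self.noindex to True if a robots/googlebot meta tag contains 'noindex'."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            directives = [d.strip().lower() for d in attributes.get("content", "").split(",")]
            if "noindex" in directives:
                self.noindex = True


def has_noindex(html):
    """Return True if the HTML contains a robots meta tag with a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.noindex


print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))  # prints True
print(has_noindex('<head><meta name="robots" content="index, follow"></head>'))    # prints False
```

Running a check like this over the most important templates right after the release catches the problem before bots have recrawled a large part of the site.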

2. Even after checking twice, you should check one more time just to be sure

I don’t want to sound like a broken record here. The point is that a site on a new CMS often works differently, and it can take a few months before a search bot gets to crawl all the pages of a big website. During that time, keep your eyes wide open to make sure all the URLs are being indexed correctly.

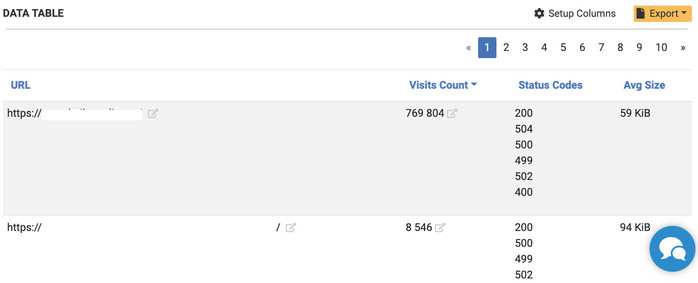

At one point, our client’s site ran into an issue with “blinking” status codes. That is when, for instance, a bot crawls a page and gets a “200 OK” code, then crawls the same page again and gets a “500” error.

If a site returns a lot of blinking HTTP responses, bots will treat it as unstable and demote it in the SERPs. The SEOs shared a list of problematic URLs with the developers, who in turn eliminated the cause of the issue.
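Blinking status codes can be pulled out of bot log entries by grouping the responses per URL. This is a minimal sketch that assumes the logs have already been parsed into (URL, status code) pairs. The `blinking_urls` helper and the sample entries are hypothetical.

```python
from collections import defaultdict


def blinking_urls(log_entries):
    """Return the sorted URLs that answered bots with both a 2xx and a 5xx status."""
    status_classes = defaultdict(set)
    for url, status in log_entries:
        status_classes[url].add(status // 100)  # 200 -> 2, 500 -> 5, and so on
    return sorted(
        url for url, classes in status_classes.items() if 2 in classes and 5 in classes
    )


# Hypothetical bot hits taken from parsed server logs: (URL, HTTP status code).
entries = [
    ("/shoes", 200),
    ("/shoes", 500),
    ("/bags", 200),
    ("/bags", 200),
    ("/hats", 503),
    ("/shoes", 200),
]

print(blinking_urls(entries))
# prints ['/shoes']
```

A URL that always returns 5xx is a different kind of problem, so it is deliberately left out of this list.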

Results

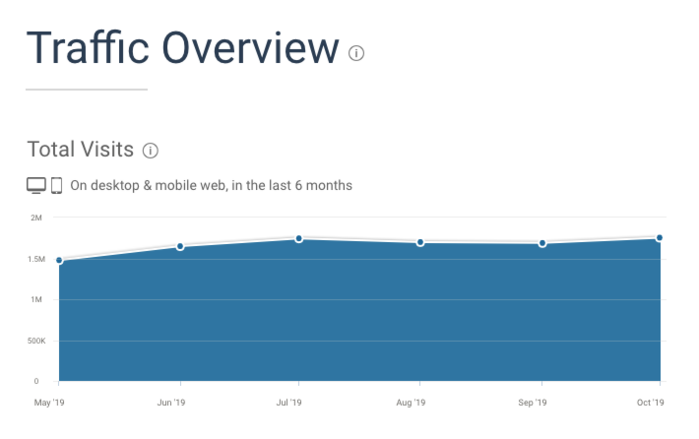

To quickly summarize the results, I’ll just show the statistics for total organic visits over the last six months. The migration took place on July 1, 2019, as shown below:

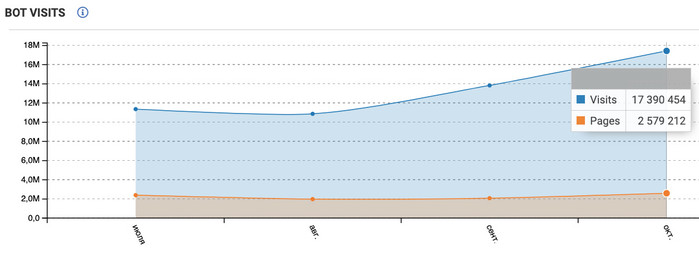

Both the number of bot visits to the site and the number of crawled pages have increased since July, as shown below:

Source: data from server logs analysis

Bonus:

Would you agree that professional advice from SEO experts is worth its weight in gold? That’s why I asked SEO influencers to name the technical parameters that are crucial at the pre-launch stage. The following are the most useful insights:

- URL structure (as I would want the ability to leave the old structure)

- Ability to add/change attributes in the head on a page level (canonicals, meta-robots)

- The overall performance (e.g. in terms of speed)

Kristina Azarenko – SEO expert with 10 years of hands-on experience. Author of marketingsyrup.com

- Content migration

- URL & Content consistency (e.g. on-page, titles, meta)

- Redirects (most important if content changing)

- Site speed

- Robots.txt & Noindex

- Technical carryover or upgrades (if you have enhancements in place you want them to stay in place)

Jacob Stoops – Sr. SEO Manager at SearchDiscovery. Over a decade in SEO. Host of Page2Podcast

- Clean up navigation, url structure, hierarchy

- Export all url’s and matching keywords (also rankings)

- Redirect plan

- Change internal links (relative path)

- Validate each url has got a self referring canonical / or designated canonical

- Check if meta data and pagination is correct for new pages + still correct for migrated pages

- Update robots.txt file

- Change XML sitemap

- Check feeds to third parties (such as Channable f.e.)

- Validate SSL certificate

- Remove all unnecessary / old scripts

- As clean as possible page source (in terms of performance)

- Change / implement trackers as Analytics, GTM

- Change (if relevant) structured data information to new domain / setup

Marc van Herrikhuijzen. SEO strategist at BCC Elektro-speciaalzaken BV

Marc also kindly shared his advice on post-migration optimization. You can read his post in the Technical SEO group on LinkedIn.

I’ll add: make sure the CMS doesn’t create fragments or nodes (or if it does, that you account for that). Common in Drupal, custom post types in WordPress, etc.

Jenny Halasz. SEO & Analytics Expert; President JLH Marketing. Professional Speaker

I’d probably add checking media behaviour (WP might create attachment pages for example), reviewing robots (some like Shopify are locked-down), XML sitemap behaviour

Matt Tutt Holistic digital marketing consultant specialising in SEO from Bournemouth in the UK

Don’t forget to renew old domains that have been migrated. These old domains often have lots of links pointing to them, and losing those is likely to cause HUGE ranking drops.

Steven van Vessum. VP of Community at Content King, contributor to SEJ, Content Marketing Institute, and CMSwire

To sum up

Site migration is a complex process, and that is where a data-driven SEO approach is a MUST. To make this task a piece of cake, divide it into two stages: BEFORE and AFTER the release.

At the pre-launch stage, conduct a comprehensive technical audit to find bugs that could harm your SEO on the new version of the site. Pay special attention to:

- website’s structure,

- interlinking optimization,

- redirects implementation.

At the after-launch stage, verify that everything is going according to plan by monitoring Google’s reaction and trends in end-user visits. That will help you detect technical issues and eliminate them as soon as possible.

As you can see, migrating a big site won’t be a nightmare if you approach it comprehensively. This is where an analytics tool is absolutely necessary. I won’t name the best tool for a technical SEO audit; I’ll just leave a link to a 7-day free trial.

Get more useful info: 8 Common Robots.txt Mistakes and How to Avoid Them

Wanna learn more? Here is how to optimize hreflangs and thus increase indexability. Case study