Why does the crawl of your website fail?

In this article, we’ll explain why a crawl of your website can fail. There are several reasons why a crawl may not finish. Some are related to your web server settings, for example when the server treats our crawler as a potential security threat. Others are related to errors on your website or to the code of a specific page. If an error or a non-200 status code is the reason for a failed crawl, search engines will not be able to crawl your website either. Therefore, we recommend checking why the crawl could not finish every time it happens.

More information: How does the JetOctopus crawler work?

Below we look at the reasons why a crawl can fail and explain how to fix each problem.

Your web server has blocked the JetOctopus crawler

If the crawl does not work, it is likely that your web server has blocked the JetOctopus user agent. Some websites automatically block all user agents that are run by automated systems. The administrator of your website can also block our crawler if they see that it is highly active and could overload your web server.

How to solve it? Contact your website administrator and let them know that you will be crawling the website. Submit a list of JetOctopus IPs to be whitelisted:

- 54.36.123.8

- 54.38.81.9

- 147.135.5.64

- 139.99.131.40

- 198.244.200.110

Also, you can reduce the number of threads or set up a scheduled crawl at a time when your website will have low traffic.

Read the article “How to run a scheduled crawl” to get more information.

If you crawl with JetOctopus using the GoogleBot user agent, your web server may block JetOctopus as a fake bot. Many servers verify user agents that emulate search engines: the web server performs a reverse DNS lookup on the request IP to validate GoogleBot, and if the request does not come from a real GoogleBot, it is blocked. In such cases, adding the JetOctopus IPs to the whitelist will also help.
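To illustrate why a crawl with the GoogleBot user agent can be rejected, here is a minimal sketch of the kind of reverse DNS check a web server might run. It is not taken from any particular server: the function name `is_real_googlebot`, the injectable `reverse` and `forward` resolvers, and the list of accepted hostname suffixes are assumptions made for this example.

```python
import socket
from typing import Callable, List

# Assumed list of hostname suffixes that identify Google crawlers.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def _reverse(ip: str) -> str:
    # PTR lookup: IP address -> hostname.
    return socket.gethostbyaddr(ip)[0]


def _forward(host: str) -> List[str]:
    # Forward lookup: hostname -> list of IPv4 addresses.
    return socket.gethostbyname_ex(host)[2]


def is_real_googlebot(
    ip: str,
    reverse: Callable[[str], str] = _reverse,
    forward: Callable[[str], List[str]] = _forward,
) -> bool:
    """Return True only if the IP resolves to a Google host and back again."""
    try:
        host = reverse(ip).rstrip(".").lower()
    except OSError:
        return False  # no PTR record at all
    if not host.endswith(GOOGLE_SUFFIXES):
        return False  # hostname does not belong to Google
    try:
        # The forward lookup must return the original IP,
        # otherwise the PTR record could be spoofed.
        return ip in forward(host)
    except OSError:
        return False
```

A request that comes from a JetOctopus IP fails this check even when it sends the GoogleBot user agent, because the IP does not resolve to a Google hostname. That is why whitelisting the IPs is needed.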

If you use Cloudflare or a similar service, you can manually configure an exception for JetOctopus. Read more in the article “How to crawl websites using Cloudflare with the Googlebot user-agent”.

Your website is blocked by the robots.txt file

If the whole website is blocked from scanning by the robots.txt file, JetOctopus will not crawl your site.

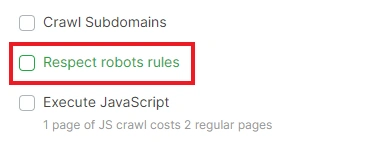

Therefore, if the robots.txt file contains the directives “User-agent: *” and “Disallow: /” and you want to start a crawl, deactivate the “Respect robots rules” checkbox or add a custom robots.txt file.

To learn how to use a custom robots.txt file properly, read the article “How to configure a crawl”.
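Before starting a crawl, you can check whether a robots.txt file blocks the start URL. The sketch below uses Python's standard `urllib.robotparser` module. The helper name `site_is_blocked` and the `example.com` URLs are placeholders for illustration, and the parser may differ from the JetOctopus crawler in edge cases.

```python
from urllib.robotparser import RobotFileParser


def site_is_blocked(robots_txt: str, start_url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt text disallows start_url."""
    parser = RobotFileParser()
    # parse() accepts the file content as a list of lines,
    # so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, start_url)


fully_blocked = "User-agent: *\nDisallow: /\n"
partly_blocked = "User-agent: *\nDisallow: /admin/\n"

print(site_is_blocked(fully_blocked, "https://example.com/"))   # True
print(site_is_blocked(partly_blocked, "https://example.com/"))  # False
```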

Your website is closed for indexing

If the “Respect robots rules” checkbox is activated and the whole website is closed to indexing in any way, the crawler will not be able to crawl the website. Deactivate this checkbox and start the crawl again.

Our crawler works on the same principle as search engines, so by default JetOctopus can crawl only the pages that are available to GoogleBot. If the crawl doesn’t work, GoogleBot probably won’t be able to crawl your website either.

Your site returns a 4xx or 5xx response code

If the start URL (entered in the URL line in the “Basic settings”) returns a 4xx or 5xx response code, JetOctopus will not be able to continue crawling. To continue, JetOctopus needs links in <a href=> elements in the page code, and the full code of a non-200 page is not available to search engines, crawlers or users. That is why our crawler will not find any links to follow.

If the start page redirects (301, 302, 307, 308, etc.), the crawler will go to the redirect target and crawl that page and the links in its code.
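The start URL logic described here can be sketched as a small function. This is an illustration, not the actual JetOctopus implementation: `resolve_start_url`, the verdict strings and the `fetch` callable (which returns a status code and an optional `Location` value) are hypothetical names. The fake site at the bottom stands in for real HTTP requests.

```python
from typing import Callable, Dict, Optional, Tuple
from urllib.parse import urljoin

REDIRECT_CODES = {301, 302, 303, 307, 308}
Response = Tuple[int, Optional[str]]  # (status code, Location header or None)


def resolve_start_url(
    url: str, fetch: Callable[[str], Response], max_hops: int = 10
) -> Tuple[str, str]:
    """Follow redirects from the start URL and report whether a crawl can begin."""
    for _ in range(max_hops):
        status, location = fetch(url)
        if status in REDIRECT_CODES and location:
            # Location may be relative, so resolve it against the current URL.
            url = urljoin(url, location)
            continue
        if status == 200:
            return url, "crawlable"
        if 400 <= status < 600:
            return url, "blocked by error status"
        return url, "unexpected status"
    return url, "too many redirects"


# A fake site used instead of real HTTP requests.
FAKE_SITE: Dict[str, Response] = {
    "http://example.com/": (301, "https://example.com/"),
    "https://example.com/": (200, None),
    "https://example.com/old": (404, None),
}

print(resolve_start_url("http://example.com/", FAKE_SITE.__getitem__))
print(resolve_start_url("https://example.com/old", FAKE_SITE.__getitem__))
```

The first call follows the 301 and ends on a crawlable page. The second call stops at the 404, which is the case where the crawl cannot continue.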

Missing URLs in page code

JetOctopus will not be able to scan your website, and the crawl will stop, if there are no internal links in the start page code and no sitemaps are available. Search engines will also be unable to crawl your website if the homepage code contains no links inside <a href=> elements.

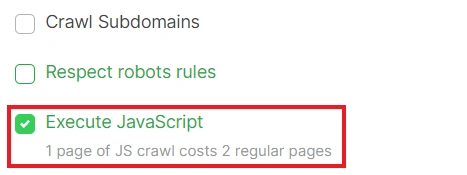
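To check whether the raw HTML of a start page contains links that a crawler can follow, you can use a sketch like the one below. It relies only on Python's standard library. The class `LinkCollector`, the function `internal_links` and the sample HTML are illustrative names and data, and the sketch treats only links on the same host as internal, which is an assumption.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect href values from <a> tags in raw (non-rendered) HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def internal_links(html: str, page_url: str) -> List[str]:
    """Return absolute http(s) links that point to the same host as page_url."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlparse(page_url).netloc
    links = []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)
        parsed = urlparse(absolute)
        if parsed.scheme in ("http", "https") and parsed.netloc == host:
            links.append(absolute)
    return links


with_links = '<a href="/about">About</a> <a href="https://other.example/">Partner</a>'
js_only = '<div onclick="openMenu()">Menu</div>'

print(internal_links(with_links, "https://example.com/"))  # ['https://example.com/about']
print(internal_links(js_only, "https://example.com/"))     # []
```

An empty list for the start page means that a crawler that does not execute JavaScript has nothing to follow.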

If the internal linking elements are rendered on the client side with JavaScript, we recommend running a JavaScript crawl. To do this, activate the “Execute JavaScript” checkbox.

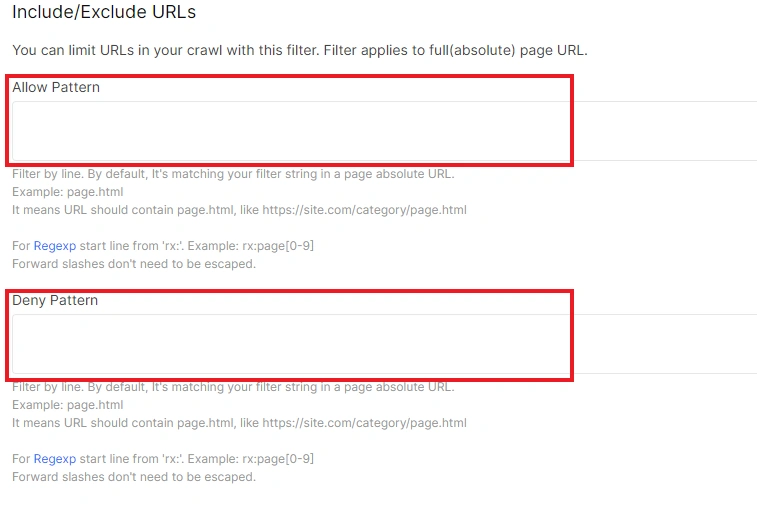

Incorrect Include/Exclude URLs

If you use an allow/deny pattern in the “Include/Exclude URLs” menu and our crawler does not find any URLs that match the rule, the crawl will fail. When using this option, check that the pattern is correct.
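One way to test a pattern before the crawl is to apply it to a few known URLs from your website. The sketch below assumes the patterns are regular expressions, which may not match the exact syntax accepted in the “Include/Exclude URLs” menu. The function name `urls_passing_filters` and the sample URLs are hypothetical.

```python
import re
from typing import Iterable, List, Optional


def urls_passing_filters(
    urls: Iterable[str],
    include: Optional[str] = None,
    exclude: Optional[str] = None,
) -> List[str]:
    """Keep URLs that match the include pattern and do not match the exclude pattern."""
    include_re = re.compile(include) if include else None
    exclude_re = re.compile(exclude) if exclude else None
    kept = []
    for url in urls:
        if include_re and not include_re.search(url):
            continue  # not covered by the allow rule
        if exclude_re and exclude_re.search(url):
            continue  # covered by the deny rule
        kept.append(url)
    return kept


sample = [
    "https://example.com/blog/post-1",
    "https://example.com/shop/item-7",
]

print(urls_passing_filters(sample, include=r"/blog/"))  # ['https://example.com/blog/post-1']
print(urls_passing_filters(sample, include=r"/blgo/"))  # [] (typo in the pattern)
```

An empty result for URLs that you expect to be crawled usually points to a typo or an overly strict rule.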

You crawl a staging website which is closed to search engines

If you need to crawl a staging website which is closed to search engines, use a special crawl configuration.

More information: How to crawl a staging website with JetOctopus.

Note that if only one page is available to the crawler, such as the homepage, and all other pages are closed to scanning or return 4xx or 5xx response codes, the crawl will fail. If this happens, analyze the reason and solve the problem, because your website may be inaccessible to search engines for the same reason.