Product update. Alerts for advanced website monitoring

The JetOctopus team has released a long-awaited feature: you can now set up your own alerts to monitor the performance of your website. If something breaks, you will receive an instant notification.

All our customers can create an unlimited number of custom alerts and use a wide range of built-in notifications. We have prepared more than 30 built-in alerts to help you quickly check the health of your website.

Advantages of alerts from JetOctopus

We know how difficult it is to track every change on your website when you have many releases and updates. Sometimes a release changes an element that is critical for SEO. For example, it may accidentally close the whole website from indexing. Search engines need only a few minutes to crawl such non-indexable pages and start dropping them from search results. That is why it is important to notice such changes in time.

We also know that communication between SEOs and developers often passes through several layers. As a result, you may learn about important releases too late.

That is why we created a powerful alert tool.

Advantages of our JetOctopus alerts:

- an unlimited number of custom alerts is included in all plans;

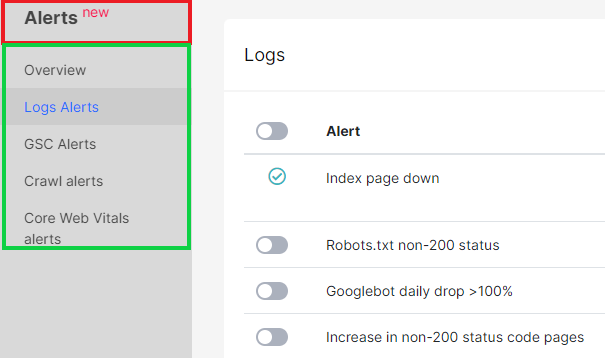

- ready-made alerts for monitoring problems in logs, Google Search Console data, crawls, and Core Web Vitals;

- you can send notifications to many people, for example, all SEO team members and the developers responsible for SEO.

Monitoring options

- search engine crawl frequency monitoring: find out when search engines overload your web server;

- find out when search engines stop crawling your website at all;

- monitoring the crawl frequency of specific bots, for example, the activity of Googlebot Mobile;

- alerts when the website, its subdomains, or custom pages become unavailable;

- monitoring of impressions and clicks for top pages: follow the dynamics of your most SEO-effective pages;

- monitoring of your most frequent keywords: get alerted as soon as your site’s position for any of these queries drops;

- monitoring of page status codes;

- monitoring of 404 response codes;

- find out when the number of non-indexable pages increases;

- monitoring of canonical tag changes.

This is by no means a complete list of monitoring options. You can customize alerts to fit your own needs.

Why SEO specialists need alerts

Alerts will help SEO specialists:

- monitor many sites simultaneously;

- reduce the amount of routine work;

- be aware of releases and instantly detect errors;

- monitor the dynamics of the website in the SERP;

- maintain a healthy site.