Product Update: Linking Explorer – an interlinking database for every page

Interlinking is one of the most powerful tools for the technical optimization of a large website. Improving interlinking alone can often move pages several positions up in the SERP for very long-tail keywords. On many websites we run into the same typical interlinking problems: unimportant pages that receive too much weight, spammy or insufficient anchor distributions, entire clusters of pages reached by only a single link, and others.

One of the main difficulties in interlinking work is the sheer volume of links. For instance, an average website with 1 million pages may have around 200 million links (about 200 per page). That is far more than the 1,048,576 rows an Excel worksheet can hold, so working with them via CSV files or Excel is impractical.

Most crawlers report a metric such as “number of internal links to the page”, but that number alone does not tell you what those links actually are. How many of them are image links and how many are text links? Which anchors lead to the page? Do the links come from indexable pages, or from non-canonical ones that pass no internal weight?
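A raw count hides exactly these distinctions. As a rough illustration (a minimal Python sketch, not Linking Explorer's actual implementation), the snippet below splits one page's inbound links into text and image links, nofollow links, links from non-canonical pages, and unique linking pages. The `Link` record, its fields, and the `canonical_pages` set are hypothetical inputs standing in for crawler data.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """One edge of a hypothetical crawled link graph."""
    source: str      # URL of the page that contains the link
    target: str      # URL the link points to
    is_image: bool   # True for image links, False for text links
    nofollow: bool   # True if the link carries rel="nofollow"


def inbound_breakdown(links, target, canonical_pages):
    """Split the raw inbound-link count of `target` into meaningful buckets."""
    incoming = [l for l in links if l.target == target]
    kinds = Counter("image" if l.is_image else "text" for l in incoming)
    return {
        "total": len(incoming),
        "text": kinds["text"],
        "image": kinds["image"],
        "nofollow": sum(l.nofollow for l in incoming),
        # Links from non-canonical pages are treated as passing no internal weight.
        "from_non_canonical": sum(l.source not in canonical_pages for l in incoming),
        "unique_sources": len({l.source for l in incoming}),
    }


target = "http://site.com/buy-black-iphone-la"
links = [
    Link("http://site.com/", target, False, False),
    Link("http://site.com/iphones", target, True, False),
    Link("http://site.com/iphones?sort=price", target, False, True),
    Link("http://site.com/iphones", target, False, False),
]
canonical = {"http://site.com/", "http://site.com/iphones"}
print(inbound_breakdown(links, target, canonical))
```

Here one of the four links comes from a parameterized, non-canonical URL, so it inflates the raw count without adding internal weight, and two of the links share the same source page.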

We are glad to present Linking Explorer

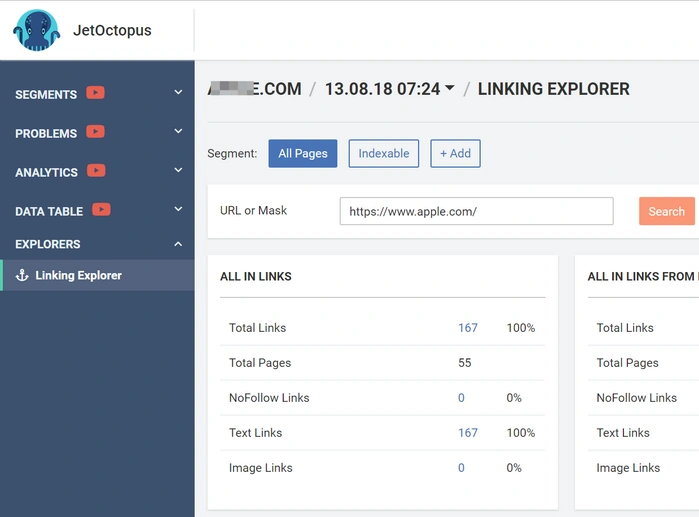

Linking Explorer is a new tool for interlinking analysis. It takes data directly from the link graph and shows aggregated information on incoming links: anchor distribution, the directories the links come from, and click distance.

It can:

- Show information for a single URL of a website (`http://site.com/buy-black-iphone-la`) or for a URL mask (`http://site.com/*-iphone-*`)

Support for URL masks makes working with clusters of pages much easier.
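The mask syntax beyond `*` is not described here, so the following is only a sketch under the assumption that `*` is the sole wildcard and matches any run of characters (including `/`). It turns the mask into a regular expression, escaping every other character so that dots or `?` in a mask are matched literally; `mask_to_regex` and `match_mask` are hypothetical names.

```python
import re


def mask_to_regex(mask):
    """Compile a URL mask in which `*` (the only assumed wildcard) matches any characters."""
    # Escape each literal piece so '.', '?' etc. match themselves, then join with '.*'.
    return re.compile(".*".join(re.escape(part) for part in mask.split("*")))


def match_mask(urls, mask):
    """Return the URLs that match the whole mask, preserving input order."""
    pattern = mask_to_regex(mask)
    return [url for url in urls if pattern.fullmatch(url)]


urls = [
    "http://site.com/buy-black-iphone-la",
    "http://site.com/buy-white-iphone-ny",
    "http://site.com/buy-black-samsung-la",
    "http://site.com/iphone-cases",
]
print(match_mask(urls, "http://site.com/*-iphone-*"))
```

Using `fullmatch` means the mask must describe the entire URL, so `http://site.com/*-iphone-*` picks up the two iPhone landing pages but not `http://site.com/iphone-cases`, which has no hyphen before `iphone`.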

Example: you sell iPhones in a wide range of models and colors, so you have lots of pages dedicated to the iPhone. Checking the links to each of them separately is tedious. Now you can simply set an “iPhone” URL mask and get a full picture of the whole iPhone cluster.

- Show the characteristics of all incoming links: % nofollow, text vs. image links, unique linking pages

Importance, content, weight

- Show the distribution of the anchors leading to the page

Google analyzes the content and semantics of a landing page through the anchors leading to it, so anchors can be used to expand the page's semantics. By analyzing the anchors that lead to a page, we can either add new relevant anchors or remove irrelevant, spammy ones.

- Show the distribution of the image alt attributes of links leading to the page

Google also analyzes the content and semantics of a landing page through the alt attributes of image links leading to it, so alt text can expand the page's semantics as well. By analyzing this parameter, we can either add more relevant alt text or remove irrelevant alt attributes.

- Show the distribution of incoming directories

The tool shows the directories of the pages that link to the landing page. Example: if the landing page is “Buy a chair in London”, incoming links from a directory such as “Buy a car in Edinburgh” are hardly appropriate.

- Show the click distance from the main page

The tool shows how many clicks from the main (index) page each page linking to the landing page sits. Important pages should be as few clicks from the main page as possible.
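Conceptually, these reports amount to counting over the link graph plus one breadth-first search from the main page. The Python sketch below illustrates that idea; it is not Linking Explorer's code. It assumes each link is a hypothetical `(source, target, text, is_image)` tuple where `text` holds the anchor text, or the alt text for image links. It also defines a page's directory as its URL path up to the last `/`, and it counts click distance over all links, ignoring nofollow and canonical signals for simplicity.

```python
from collections import Counter, defaultdict, deque
from urllib.parse import urlparse


def directory(url):
    """Directory holding a page, e.g. /chairs/office-chairs -> /chairs/ (assumed definition)."""
    return urlparse(url).path.rsplit("/", 1)[0] + "/"


def click_distances(links, home):
    """Breadth-first search: fewest clicks from `home` to every reachable page."""
    graph = defaultdict(list)
    for source, target, _text, _is_image in links:
        graph[source].append(target)
    dist = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for nxt in graph[page]:
            if nxt not in dist:  # first visit in BFS order is a shortest path
                dist[nxt] = dist[page] + 1
                queue.append(nxt)
    return dist


def incoming_report(links, landing, home):
    """Anchor, alt-text, directory and click-depth distributions for one landing page."""
    incoming = [(s, text, img) for s, t, text, img in links if t == landing]
    # Unique linking pages, de-duplicated in first-seen order.
    sources = list(dict.fromkeys(s for s, _, _ in incoming))
    dist = click_distances(links, home)
    return {
        "anchors": Counter(text for _, text, img in incoming if not img),
        "image_alts": Counter(text for _, text, img in incoming if img),
        "directories": Counter(directory(s) for s in sources),
        # None marks linking pages that cannot be reached from `home`.
        "source_click_depth": Counter(dist.get(s) for s in sources),
    }


HOME = "http://site.com/"
LANDING = "http://site.com/chairs/buy-chair-london"
links = [
    (HOME, "http://site.com/chairs/", "Chairs", False),
    (HOME, "http://site.com/cars/", "Cars", False),
    ("http://site.com/chairs/", LANDING, "buy a chair in London", False),
    ("http://site.com/chairs/office-chairs", LANDING, "office chair London", False),
    ("http://site.com/chairs/office-chairs", LANDING, "black leather chair", True),
    ("http://site.com/chairs/", "http://site.com/chairs/office-chairs", "office chairs", False),
    ("http://site.com/cars/", "http://site.com/cars/buy-car-edinburgh", "Edinburgh cars", False),
    ("http://site.com/cars/buy-car-edinburgh", LANDING, "buy a chair in London", False),
]

for name, counts in incoming_report(links, LANDING, HOME).items():
    print(name, dict(counts))
```

In this toy graph the `/cars/` entry under `directories` is the kind of off-topic source the chair/car example warns about. Counting directories and click depths per unique linking page rather than per link is a design choice; a per-link count would give extra weight to pages that link several times.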

Bonus:

- The tool can work in segment mode.

For example, you create a segment “Cell phones” (URL contains “cell phone”). While you stay in this segment, Linking Explorer shows information only on the links to the landing page that come from pages in this segment.

- You can also analyze external URLs.

For instance, if you have a related website, site.de, you can now see how site.com links to it. You get all the data described above, taken from the crawled website, for a landing page on the external site.
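Both bonus features fit the same link-graph model: a segment only restricts which source pages count, and an external landing page is simply a target URL on another host. The sketch below assumes a segment is a "source URL contains a substring" rule, as in the “Cell phones” example; the function name and sample URLs are hypothetical, and since a real URL would not contain a literal space, it uses `cell-phone` as the substring.

```python
def links_from_segment(links, landing, url_contains):
    """Links to `landing` whose source page falls into the segment.

    The segment rule (source URL contains a substring) is an assumption;
    `landing` may live on a different host than the crawled site.
    """
    return [(src, dst) for src, dst in links if dst == landing and url_contains in src]


# Hypothetical crawl of site.com that also links out to a related site, site.de.
links = [
    ("http://site.com/cell-phones/", "http://site.de/buy-black-iphone"),
    ("http://site.com/cell-phones/iphone", "http://site.de/buy-black-iphone"),
    ("http://site.com/blog/news", "http://site.de/buy-black-iphone"),
    ("http://site.com/cell-phones/", "http://site.com/cell-phones/iphone"),
]

for src, dst in links_from_segment(links, "http://site.de/buy-black-iphone", "cell-phone"):
    print(src, "->", dst)
```

Once the links are filtered this way, the anchor, directory and click-distance aggregations listed above apply to the remaining links unchanged.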

Everything you see is clickable. Clicking a block title takes you to the Linking Explorer data table, where you can apply any filters you need.

Conclusion

With Linking Explorer, interlinking work becomes much easier. All the important information you need is presented in a single tool, with absolutely no limits!