A complete guide to crawling all subdomains with JetOctopus

When crawling a site with JetOctopus, you can configure it to crawl all subdomains as well. This lets you estimate the scale of the entire website, find duplicate pages across subdomains, and more. A crawl like this can also reveal technical or staging subdomains that are linked from the HTML code because of bugs. That is why we recommend running at least one crawl with subdomains enabled.

How to crawl a website with subdomains

By default, JetOctopus does not crawl your website’s subdomains. As a reminder, any host that contains the main domain name and belongs to the same DNS zone is considered a subdomain. For example, https://en.example.com is a subdomain, while https://example.gb is a separate domain. JetOctopus will not crawl URLs like https://example.gb, even if they are found in the page code.
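To make the distinction concrete, here is a minimal Python sketch that sorts URLs into the main domain, its subdomains, and unrelated domains. It uses a simplified, hypothetical rule that only compares host names (a host counts as a subdomain if it ends with “.example.com”) and does not check DNS, so it is an illustration rather than JetOctopus’s actual matching logic.

```python
from urllib.parse import urlsplit


def classify_host(url: str, main_domain: str) -> str:
    """Return "main", "subdomain" or "other" for the host of `url`.

    Simplified, hypothetical rule: compares host names only and does not
    look at DNS. JetOctopus's real matching logic may differ.
    """
    host = (urlsplit(url).hostname or "").lower()
    main = main_domain.lower()
    if host == main:
        return "main"
    # A subdomain ends with ".<main domain>", so "notexample.com" does not match.
    if host.endswith("." + main):
        return "subdomain"
    return "other"


for u in ["https://example.com/", "https://en.example.com/page", "https://example.gb/"]:
    print(u, "->", classify_host(u, "example.com"))
```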

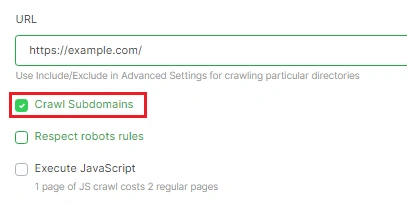

To crawl your main domain together with all its subdomains, activate the “Crawl Subdomains” checkbox when setting up a new crawl with JetOctopus. In the “URL” field, enter the absolute address of your main domain, for example https://example.com.

More information: How to configure a crawl of your website.

When crawling subdomains, JetOctopus applies the robots.txt rules of each domain separately. That is, the crawler requests https://en.example.com/robots.txt and https://example.com/robots.txt, remembers the rules from each file, and applies them when crawling the corresponding domain.
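This per-host behaviour can be illustrated with Python’s standard urllib.robotparser module. The sketch keeps one parser per host and checks each URL only against the rules of its own host. The robots.txt contents are hypothetical and hard-coded so the example runs offline; a real crawler would download each file, and JetOctopus’s own implementation may differ.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents per host, hard-coded so the sketch runs offline.
ROBOTS_TXT = {
    "example.com": "User-agent: *\nDisallow: /admin/\n",
    "en.example.com": "User-agent: *\nDisallow: /search\n",
}

# One parser per host: rules from one host never apply to another.
PARSERS = {}
for host, text in ROBOTS_TXT.items():
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    PARSERS[host] = parser


def is_allowed(url: str, user_agent: str = "*") -> bool:
    """Check `url` against the robots.txt rules of its own host."""
    parser = PARSERS.get(urlsplit(url).hostname)
    if parser is None:
        # Assumption for this sketch: no known robots.txt means no restrictions.
        return True
    return parser.can_fetch(user_agent, url)


print(is_allowed("https://example.com/search"))     # True
print(is_allowed("https://en.example.com/search"))  # False
```

The same path (/search) is allowed on the main domain but blocked on en.example.com, because each host is checked only against its own file.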

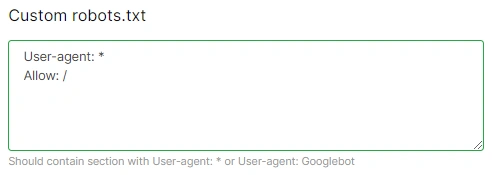

However, you can also create a custom robots.txt file for all subdomains.

More information: How to use custom robots.txt to make the crawl more efficient.

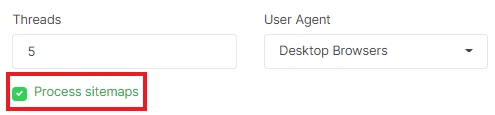

If you want JetOctopus to scan the sitemaps of all subdomains, activate the “Process sitemaps” checkbox.

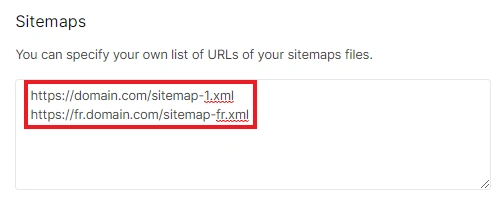

If your sitemaps are located at non-standard addresses, add links to the sitemaps of all subdomains in the “Sitemaps” field under “Advanced settings”.
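One way to find non-standard sitemap addresses before adding them to the “Sitemaps” field is to read the Sitemap: lines in each subdomain’s robots.txt. The sketch below parses hypothetical, hard-coded robots.txt contents with Python’s urllib.robotparser (site_maps() requires Python 3.8+). In practice you would fetch each file yourself, and a subdomain may not declare its sitemap in robots.txt at all.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt files of the main domain and two subdomains.
ROBOTS_TXT = {
    "https://example.com/robots.txt":
        "User-agent: *\nDisallow:\nSitemap: https://example.com/sitemap_index.xml\n",
    "https://en.example.com/robots.txt":
        "User-agent: *\nDisallow:\nSitemap: https://en.example.com/maps/en-pages.xml\n",
    "https://blog.example.com/robots.txt":
        "User-agent: *\nDisallow:\n",  # no Sitemap line: check this subdomain manually
}


def collect_sitemaps(robots_by_url: dict[str, str]) -> list[str]:
    """Return every Sitemap: URL declared in the given robots.txt files."""
    sitemaps = []
    for text in robots_by_url.values():
        parser = RobotFileParser()
        parser.parse(text.splitlines())
        # site_maps() returns None when the file has no Sitemap lines.
        sitemaps.extend(parser.site_maps() or [])
    return sitemaps


for sitemap_url in collect_sitemaps(ROBOTS_TXT):
    print(sitemap_url)
```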

Sitemaps will be processed by the crawler after all pages on all subdomains have been crawled.

You may be interested in: How To Audit XML Sitemaps

How to find a list of URLs for each subdomain

In the crawl results, pages from all subdomains are listed together in the “Pages” data table, and all charts include data for all subdomains as well. This makes it easy to find duplicate content across your website.
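If you work with a plain list of page URLs outside JetOctopus, a short script can group them by subdomain and flag paths that occur on more than one host. This is a minimal sketch with hypothetical URLs. A shared path is only a hint of possible duplicate content, not proof, since the script does not compare page content.

```python
from collections import defaultdict
from urllib.parse import urlsplit

# Hypothetical list of crawled page URLs from several subdomains.
PAGES = [
    "https://example.com/",
    "https://example.com/about",
    "https://en.example.com/",
    "https://staging.example.com/test",
]


def group_by_host(urls):
    """Map each host name to the list of its page URLs."""
    groups = defaultdict(list)
    for url in urls:
        groups[urlsplit(url).hostname].append(url)
    return dict(groups)


def shared_paths(urls):
    """Return paths that appear on more than one host (possible duplicates)."""
    hosts_by_path = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        hosts_by_path[parts.path or "/"].add(parts.hostname)
    return {path: hosts for path, hosts in hosts_by_path.items() if len(hosts) > 1}


for host, pages in sorted(group_by_host(PAGES).items()):
    print(host, len(pages))
print(shared_paths(PAGES))
```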

How to find all crawled subdomains

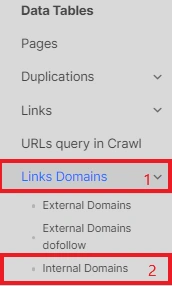

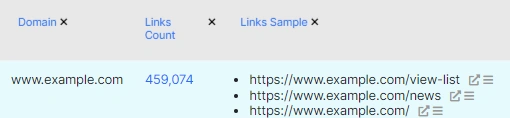

To see all the subdomains JetOctopus found during the crawl, open the data table “Links Domains” – “Internal Domains”.

In the results, you will see a list of internal domains and the number of pages found on each of them.

Note that in the “External Links” reports, all subdomains are treated as external domains. That is, if you started the crawl from https://example.com/, URLs on https://en.example.com/ (and on all other subdomains) will be considered external.
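A minimal sketch of this rule, assuming that “internal” means exactly the same host as the start URL: it extracts the href values of <a> tags with Python’s html.parser, resolves relative links, and then compares hosts. The HTML and URLs are hypothetical, and the exact-host comparison is a simplified illustration, not JetOctopus’s code.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collect the href values of <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def split_links(html, page_url, start_url):
    """Split a page's links into internal and external ones.

    Simplified assumption: a link is internal only if its host is exactly
    the host of the start URL, so subdomain links count as external.
    """
    collector = LinkCollector()
    collector.feed(html)
    start_host = urlsplit(start_url).hostname
    internal, external = [], []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links first
        if urlsplit(absolute).hostname == start_host:
            internal.append(absolute)
        else:
            external.append(absolute)
    return internal, external


html = '<a href="/about">About</a> <a href="https://en.example.com/">EN</a>'
internal, external = split_links(html, "https://example.com/", "https://example.com/")
print("internal:", internal)
print("external:", external)
```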

My website has many subdomains, but JetOctopus found only a few of them. Why?

Crawling begins at the start page; the crawler then scans all URLs it finds in that page’s HTML code and continues from there. If links to subdomains are buried deep in the site structure, JetOctopus may not reach them before it hits the crawl limits or the limit set in the “Pages limit” field.
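The effect of a page limit can be illustrated with a toy breadth-first crawl over a hypothetical link graph. Both the graph and the breadth-first order are assumptions made for illustration; the point is only that a subdomain linked from a deep page is discovered late and can be cut off by the limit.

```python
from collections import deque

START = "https://example.com/"

# Hypothetical link graph: page -> links found in its HTML code.
LINKS = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/a/1"],
    "https://example.com/b": ["https://example.com/b/1"],
    "https://example.com/a/1": ["https://shop.example.com/"],  # subdomain linked deep
    "https://example.com/b/1": [],
    "https://shop.example.com/": [],
}


def crawl(start, pages_limit):
    """Breadth-first crawl that stops once `pages_limit` pages are crawled."""
    seen = {start}
    queue = deque([start])
    crawled = []
    while queue and len(crawled) < pages_limit:
        url = queue.popleft()
        crawled.append(url)
        for link in LINKS.get(url, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return crawled


print(crawl(START, 5))
print(crawl(START, 6))
```

With a limit of 5 pages, shop.example.com is discovered but never crawled; raising the limit to 6 includes it.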

If you are sure the limits were sufficient, look for the cause in the article “Why are there missing pages in the crawl results?”.

We also recommend analyzing logs when investigating subdomains.