Case Study: How DOM.RIA Doubled Their Googlebot Visits Using JetOctopus

DOM.RIA.com is a Ukrainian real estate marketplace. Thousands of listings for the sale and rental of property are added to the site every day. The site structure is large and complex, and we wanted the content appearing in Google Search to stay fresh and relevant. To achieve that, we needed search robots to crawl the site as efficiently as possible.

A search robot (also called a crawler, a bot, or a “spider”) is any program that automatically discovers and crawls websites by following links from page to page. Google’s main crawler is called Googlebot.

What was the task?

Real estate is an industry that depends on geography and location. Accordingly, the site’s pages form a vertical hierarchy (for example, city ⇾ district ⇾ micro-district ⇾ metro station, and so on). As a result, the directories we were promoting were not always within three clicks of the home page, which made it harder for search robots to find and crawl them.
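Click depth is the minimum number of clicks needed to reach a page from the home page. It can be computed with a breadth-first search over the site’s internal links. The sketch below assumes the link graph is already available as a dictionary. The URLs are hypothetical and only mirror the city ⇾ district ⇾ micro-district ⇾ metro hierarchy described above.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str) -> dict[str, int]:
    """Breadth-first search from `start` (the home page).

    `links` maps each URL to the URLs it links to. The result maps every
    reachable URL to its click depth: the fewest clicks needed to reach it.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first discovery in BFS = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical link graph: home -> city -> district -> micro-district -> metro.
site = {
    "/": ["/kyiv/"],
    "/kyiv/": ["/kyiv/district-a/"],
    "/kyiv/district-a/": ["/kyiv/district-a/micro-1/"],
    "/kyiv/district-a/micro-1/": ["/kyiv/district-a/micro-1/metro-x/"],
}

depths = click_depths(site, "/")
too_deep = [url for url, depth in depths.items() if depth > 3]
print(too_deep)  # → ['/kyiv/district-a/micro-1/metro-x/']
```

BFS visits pages in order of distance from the start page. As a result, the first time it reaches a URL is also that URL’s shortest path from the home page.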

After researching the relevant search queries, we decided to extend the structure with the following directories:

- real estate on city streets

- real estate on city lanes

- real estate by house number in the city

This was not without problems. When the directories were first created, their pages were poorly indexed and Googlebot rarely visited them.

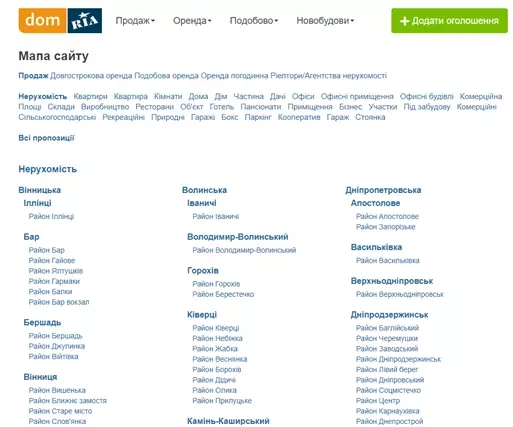

To make these pages easier for crawlers to reach, we created an updated sitemap and placed it in the footer. It is a neat list of links.

DOM.RIA Sitemap

It might seem that this solved the problem, since all the links were now within 2–3 clicks of the home page. It did not.

Because DOM.RIA is a large website, the sitemap is large too: it spans several tens of thousands of paginated pages. Yet the bot stubbornly ignored the sitemap’s pagination pages.

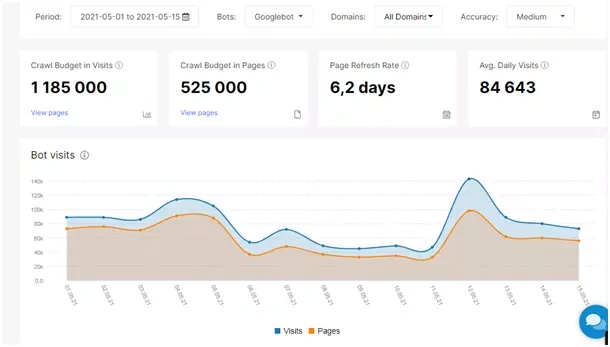

Over 15 days, the bot visited:

- 525,000 street pages

- Only 10,000 metro pages

- 196,000 district pages

Logs of bot visits to street directories

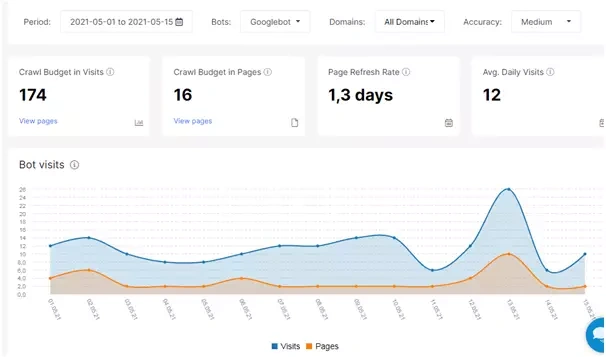

Meanwhile, the bot visited only 16 sitemap pages in those 15 days.

Logs of visiting the sitemap by a bot

This was simply not good enough for us: given the geography of Ukraine, these numbers are very low.

Rethinking internal linking

Log analysis in JetOctopus quickly showed us that the search bot tends to visit pages for cities, regions, and similar locations. For example, over the same period, Googlebot visited 1,048,000 real estate catalog pages.

Logs of bot visits to real estate catalogs

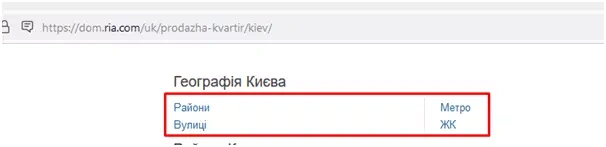
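JetOctopus performs this kind of analysis through its own interface. To try the idea on raw server logs, here is a minimal standalone sketch; it is not how JetOctopus works internally. It assumes access logs in the Combined Log Format and uses hypothetical URL patterns (`/street/`, `/metro/`, `/district/`, `/sitemap/`) to group Googlebot requests by page type. It trusts the user-agent string. That header can be spoofed, and Google documents a reverse DNS check for verifying genuine Googlebot requests, which this sketch omits.

```python
import re
from collections import Counter

# Combined Log Format: host ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical URL patterns; the article does not describe DOM.RIA's real URL layout.
PAGE_TYPES = [
    ("street", re.compile(r"/street/")),
    ("metro", re.compile(r"/metro/")),
    ("district", re.compile(r"/district/")),
    ("sitemap", re.compile(r"/sitemap/")),
]


def classify(path: str) -> str:
    """Return the first page type whose pattern matches the path."""
    for name, pattern in PAGE_TYPES:
        if pattern.search(path):
            return name
    return "other"


def count_googlebot_hits(lines) -> Counter:
    """Count requests whose user-agent claims to be Googlebot, grouped by page type."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            counts[classify(match.group("path"))] += 1
    return counts


BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [01/Mar/2021:10:00:00 +0000] "GET /kyiv/street/a/ HTTP/1.1" 200 5120 "-" "{BOT}"',
    f'66.249.66.1 - - [01/Mar/2021:10:00:05 +0000] "GET /kyiv/street/b/ HTTP/1.1" 200 4096 "-" "{BOT}"',
    f'66.249.66.2 - - [01/Mar/2021:10:01:00 +0000] "GET /kyiv/metro/x/ HTTP/1.1" 200 3000 "-" "{BOT}"',
    '203.0.113.7 - - [01/Mar/2021:10:02:00 +0000] "GET /kyiv/street/a/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]
counts = count_googlebot_hits(sample)
print(counts)
```

The last sample line comes from a regular browser, so only the three Googlebot requests are counted.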

Based on this insight, we decided to create mini-sitemaps for each city directory, listing the URLs for streets, districts, metro stations, and so on that were being crawled poorly.

We gave these pages their own meta tags and added links to them on pages that Google visits often.

An example of how the pages were linked

At the same time, we removed the original sitemap.

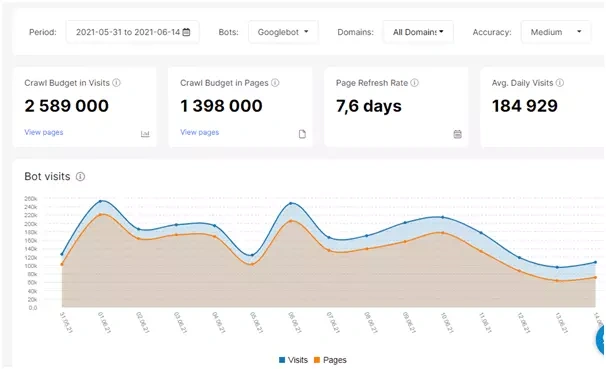
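The mini-sitemap approach can be sketched in two steps. First, group the poorly crawled URLs by city. Second, split each group into hub pages that each carry their own title and meta description. Everything here is a hypothetical illustration, not DOM.RIA’s code: the URL layout (city as the first path segment), the cap of 100 links per hub page, and the title wording.

```python
from collections import defaultdict
from html import escape

# Hypothetical cap on links per hub page; the article does not give a number.
MAX_LINKS_PER_HUB = 100


def build_hub_pages(urls: list[str], max_links: int = MAX_LINKS_PER_HUB) -> dict[str, list[list[str]]]:
    """Group URLs by city (assumed to be the first path segment) and
    split each city's URLs into chunks of at most `max_links` links."""
    by_city: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        city = url.strip("/").split("/")[0]
        by_city[city].append(url)

    hubs: dict[str, list[list[str]]] = {}
    for city, city_urls in by_city.items():
        city_urls.sort()  # deterministic link order across rebuilds
        hubs[city] = [
            city_urls[i : i + max_links] for i in range(0, len(city_urls), max_links)
        ]
    return hubs


def render_hub(city: str, page_no: int, links: list[str]) -> str:
    """Render one hub page as minimal HTML with its own title and meta description."""
    # Hypothetical title wording, reused for <title> and the meta description.
    title = escape(f"Real estate in {city}: streets, districts, metro (page {page_no})")
    items = "\n".join(f'    <li><a href="{escape(u)}">{escape(u)}</a></li>' for u in links)
    return (
        "<!doctype html>\n<html><head>\n"
        f"  <title>{title}</title>\n"
        f'  <meta name="description" content="{title}">\n'
        "</head><body>\n  <ul>\n" + items + "\n  </ul>\n</body></html>"
    )


# Usage with hypothetical URLs: 250 Kyiv street pages and one Kharkiv metro page.
urls = [f"/kyiv/street/street-{n}/" for n in range(250)] + ["/kharkiv/metro/station-1/"]
hubs = build_hub_pages(urls)
page_html = render_hub("kyiv", 1, hubs["kyiv"][0])
```

Capping the links per page keeps each hub a reasonable size. The hubs themselves still need to be linked from pages the bot already visits often, as described above.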

How did bot visits change?

Fifteen days after the changes, we measured bot visits over a period of the same length (15 days) and saw the following results:

- 1,398,000 street pages (2.66×, or +166%)

- 23,000 metro pages (2.3×, or +130%)

- 450,000 district pages (2.3×, or +130%)
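To get the relative changes, divide each new count by the old one. The quotient is the growth factor, and subtracting 1 from it gives the percentage increase, so a 2.66× factor corresponds to +166%. A quick check using the figures from the two 15-day lists:

```python
def growth(before: int, after: int) -> tuple[float, float]:
    """Return (growth factor, percentage increase) for two visit counts."""
    factor = after / before
    return factor, (factor - 1) * 100


# Googlebot visits per page type over each 15-day window, as reported in the article.
before = {"street": 525_000, "metro": 10_000, "district": 196_000}
after = {"street": 1_398_000, "metro": 23_000, "district": 450_000}

for page_type in before:
    factor, pct = growth(before[page_type], after[page_type])
    print(f"{page_type}: x{factor:.2f} ({pct:+.0f}%)")
# street: x2.66 (+166%)
# metro: x2.30 (+130%)
# district: x2.30 (+130%)
```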

Logs of the bot visiting street pages after the experiment

Future plans

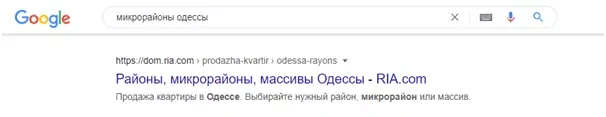

As a bonus, the hub pages began ranking for a number of keywords and bringing in clicks.

Google SERP page

This suggests that users find such pages useful. So we plan to turn them from a plain wall of links into full-fledged landing pages that satisfy user queries. Several tasks are in the pipeline:

- Collect relevant keywords and optimize the meta tags accordingly

- Improve the design and navigation

- Supplement pages with relevant content

- Continue optimizing the internal linking structure