Top Insights from JetOctopus Big Data Research

Recently, Serge Bezborodov, CTO of JetOctopus, shared some interesting insights on Twitter about how the Internet works. In this article, we summarize the main ideas and conclusions of this research. After all, understanding how the Internet works can help you improve your SEO.

What data were researched

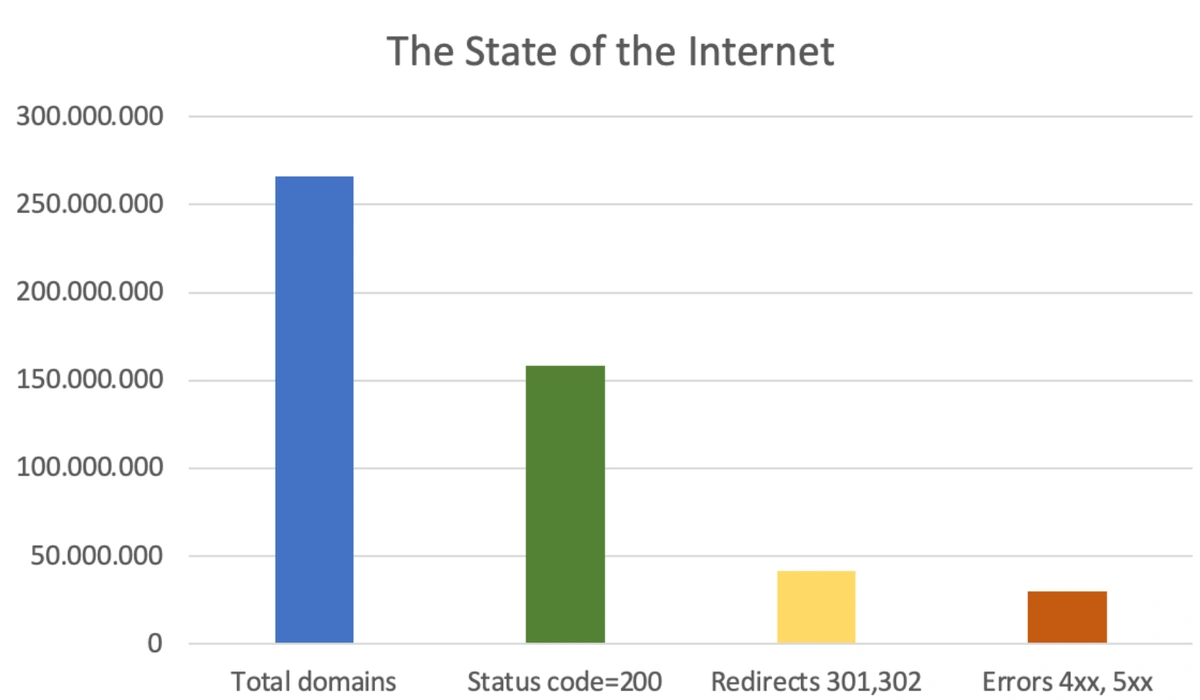

During the research, JetOctopus scanned 266 million domains. In fact, twice as many hosts were scanned, because we checked each domain both with and without www. As Serge Bezborodov said, such research helps debunk (or confirm) SEO myths and improve JetOctopus scanning algorithms.

JetOctopus did similar research two years ago: in 2020, we scanned 252 million domains. That is, over the past two years, the number of domains has increased by at least 14 million.

More information: How we scanned the Internet and what we’ve found (2020)

During the large Internet scan, we checked each domain in its bare form (example.com) and with the www prefix (www.example.com), over both HTTPS and HTTP. If at least one variant returned a response, we checked the robots.txt file. We also checked IP addresses.
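The probing order described above can be sketched as follows. This is a minimal illustration, not the JetOctopus crawler: the function names `candidate_urls` and `robots_url` are hypothetical, and the order in which variants are tried is an assumption.

```python
from itertools import product


def candidate_urls(domain: str) -> list[str]:
    """Return the four scheme/host variants to probe for a bare domain."""
    hosts = [domain, f"www.{domain}"]
    schemes = ["https", "http"]  # assumed order: HTTPS first
    return [f"{scheme}://{host}/" for scheme, host in product(schemes, hosts)]


def robots_url(base_url: str) -> str:
    """Build the robots.txt URL for a variant that returned a response."""
    return base_url.rstrip("/") + "/robots.txt"


for url in candidate_urls("example.com"):
    print(url, "->", robots_url(url))
```

Only the variants that actually respond need a follow-up robots.txt request.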

Data

Of all scanned domains, 59% (158 million) returned a 200 OK status code.

42 million domains had a permanent or temporary redirect to another domain.

And another 30 million domains had a client or server error, i.e. returned a 4xx or 5xx response code.
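The three groups above correspond to standard HTTP status code classes. A minimal sketch of such a tally, with hypothetical bucket names and sample input:

```python
from collections import Counter


def classify_status(code: int) -> str:
    """Map an HTTP status code to a coarse bucket."""
    if code == 200:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client_error"
    if 500 <= code < 600:
        return "server_error"
    return "other"  # 1xx and non-200 2xx codes


def tally(status_codes) -> Counter:
    """Count how many responses fall into each bucket."""
    return Counter(classify_status(code) for code in status_codes)


# Sample data for illustration only, not figures from the research.
print(tally([200, 301, 302, 404, 503, 200]))
```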

In the screenshot you can see a comparison of all domains, “live” domains and domains with non-200 status codes.

Of course, some domains were very dynamic, so their status code changed during the scanning process. Given the number of domains we had to scan, this was expected.

Serge Bezborodov also highlights an interesting fact: 16% of scanned domains (43.6 million) are parked domains. A parked domain is a domain name registered but not connected to an online service like a website or email hosting.

Parked domains are usually used by websites that care about their brand. For example, if a brand has a recognizable name, it buys domains with similar names. This is also done for security purposes, because users can confuse a real name with a similar one and give their data to attackers.

IP neighbors

There is a hypothesis that a shared IP address can harm SEO if one of the sites has malicious content. But the situation is somewhat different. In the process of our big data research, we discovered that a very small number of IP addresses host all public websites.

All websites on the Internet are located on only 18 million public IPs. On average, every website has 13 neighbors. Of course, there is a very high probability that one of the neighboring websites will have low-quality or malicious content.
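One way to count IP neighbors is to group domains by the IP address they resolve to. The sketch below assumes a ready-made domain-to-IP mapping and hypothetical function names; the research may have computed its average differently.

```python
from collections import defaultdict


def neighbors_per_site(domain_to_ip: dict[str, str]) -> dict[str, int]:
    """For each domain, count the other domains hosted on the same IP."""
    by_ip = defaultdict(list)
    for domain, ip in domain_to_ip.items():
        by_ip[ip].append(domain)
    return {domain: len(by_ip[ip]) - 1 for domain, ip in domain_to_ip.items()}


def average_neighbors(domain_to_ip: dict[str, str]) -> float:
    """Average neighbor count across all domains (0.0 for empty input)."""
    counts = neighbors_per_site(domain_to_ip)
    return sum(counts.values()) / len(counts) if counts else 0.0


# Sample data using documentation-only IP ranges.
sample = {
    "a.example": "192.0.2.1",
    "b.example": "192.0.2.1",
    "c.example": "192.0.2.1",
    "d.example": "192.0.2.2",
}
print(neighbors_per_site(sample))
print(average_neighbors(sample))
```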

Robots.txt analysis

All SEO specialists take care of the robots.txt file. This file is needed to control the crawling of your website by search engines and other bots.

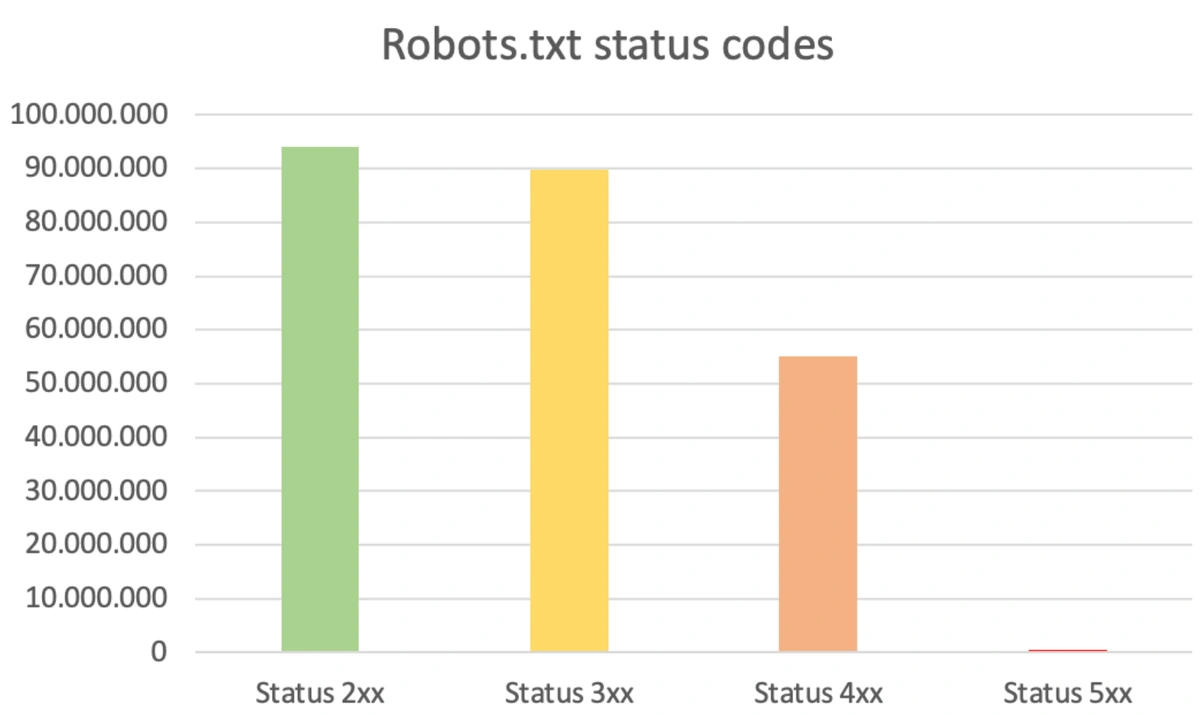

But only 93 million websites have robots.txt (58%). The average file size is 667 bytes.

The other websites did not have a usable robots.txt file. For some of them, the robots.txt request returned a 4xx or 3xx response code. And another 620,000 domains had a robots.txt file that returned a 5xx status code.

If robots.txt returns a 5xx status code during a bot visit, a crawler will typically treat crawling of your website as completely prohibited.
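The robots.txt standard (RFC 9309) describes how a crawler should react to each class of response: parse a successful response, follow redirects, treat 4xx as "no restrictions", and treat 5xx as a complete disallow. A minimal sketch of that decision, with hypothetical policy names; individual search engines may deviate in the details.

```python
def robots_policy(status_code: int) -> str:
    """Decide how to treat a site based on the robots.txt response status."""
    if 200 <= status_code < 300:
        return "parse_rules"      # read and apply the rules in the file
    if 300 <= status_code < 400:
        return "follow_redirect"  # fetch the redirect target instead
    if 400 <= status_code < 500:
        return "allow_all"        # file unavailable: no crawl restrictions
    if 500 <= status_code < 600:
        return "disallow_all"     # file unreachable: assume nothing may be crawled
    return "unknown"


for code in (200, 301, 404, 503):
    print(code, robots_policy(code))
```

This is why a robots.txt that answers with a server error is more harmful than one that is simply missing.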

WordPress

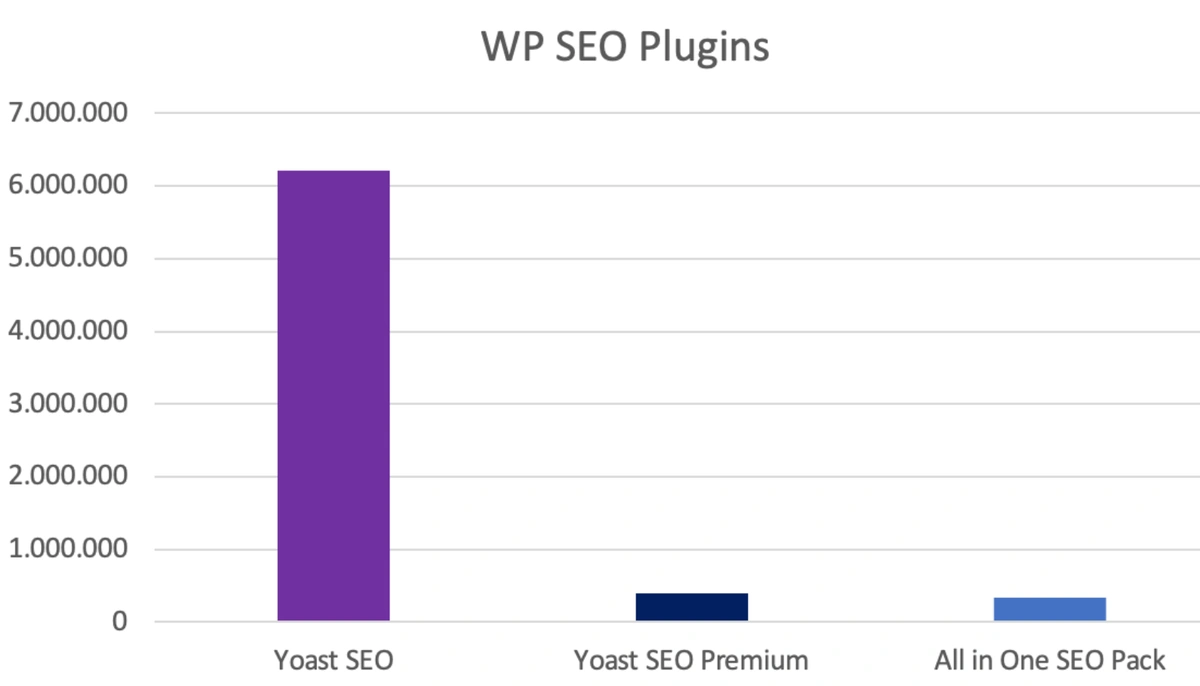

Approximately 14% of websites (21.6 million) use WordPress. Various plugins are used to optimize WordPress websites. The most popular turned out to be Yoast SEO, with 6.2 million installations and almost 400,000 with Yoast SEO Premium.

All in One SEO Plugin is used by 333,000 websites.

PHP

By analyzing response headers (the X-Powered-By header), we detected that PHP runs on at least 35.4 million domains.

By the way, 7.1 million websites use Cloudflare and 430,000 use the Fastly CDN. Cloudflare has several features that can affect website security as well as SEO. In particular, Cloudflare can affect site speed. Fastly CDN has similar features.

Wix powers 800,000 websites hosted on non-Wix domains.
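Detection through the X-Powered-By header can be sketched like this. The helper name `detect_php` and the regular expression are assumptions for illustration. Because servers can hide or change this header, such a check only gives a lower bound, which matches the "at least" wording above.

```python
import re
from typing import Optional


def detect_php(headers: dict[str, str]) -> Optional[str]:
    """Return the PHP version from X-Powered-By, "unknown" if PHP is named
    without a version, or None if the header does not mention PHP."""
    for name, value in headers.items():
        if name.lower() != "x-powered-by":  # header names are case-insensitive
            continue
        match = re.search(r"php(?:/([\d.]+))?", value, re.IGNORECASE)
        if match:
            return match.group(1) or "unknown"
    return None


print(detect_php({"X-Powered-By": "PHP/7.4.33"}))
print(detect_php({"Server": "nginx"}))
```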

Backlinks

69M index pages have external backlinks. From this fact we can conclude that backlinks are extremely valuable for any website. For search engines, high-quality external links are a ranking signal. Quality backlinks can improve PageRank.

You can check the external links on your website using JetOctopus. Make sure you are not giving your PageRank to the wrong websites.

More information: How to audit external links with JetOctopus.

Redirects

Redirects are used to forward visitors from one URL to another. For example, redirects are used when migrating domains, changing URLs, deleting pages, etc. If the change is permanent, use a 301 or 308 redirect. If the change is temporary, use a 302 or 307 redirect. Also, many websites buy dropped domains, competitors' domains, etc., and put redirects on them.

During our large Internet scan, we found 24.5 million websites with a 301 redirect, pointing to 9.5 million domains. What does it mean? It means that many websites went through a domain migration or used dropped domains. Like backlinks, redirects are a powerful signal to Google and can impact your SEO.
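The permanent versus temporary distinction maps directly onto status codes. A small sketch with a hypothetical helper name:

```python
PERMANENT_REDIRECTS = {301, 308}
TEMPORARY_REDIRECTS = {302, 307}


def redirect_kind(status_code: int) -> str:
    """Classify a status code as a permanent or temporary redirect."""
    if status_code in PERMANENT_REDIRECTS:
        return "permanent"
    if status_code in TEMPORARY_REDIRECTS:
        return "temporary"
    return "not_a_listed_redirect"  # other codes are outside this sketch


for code in (301, 302, 307, 308, 200):
    print(code, redirect_kind(code))
```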
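Figures like these can be derived from crawl records by counting the source domains that answer with a 301 and the distinct domains they point to. The record layout `(source_domain, status, location)` and the www-stripping rule below are assumptions, not the method used in the research.

```python
from urllib.parse import urlsplit


def _strip_www(host: str) -> str:
    """Treat www.example.com and example.com as the same domain."""
    return host[4:] if host.startswith("www.") else host


def summarize_301(records) -> tuple[int, int]:
    """Return (number of redirecting domains, number of distinct targets)."""
    sources, targets = set(), set()
    for source_domain, status, location in records:
        if status != 301 or not location:
            continue
        target_host = urlsplit(location).hostname
        if target_host is None:  # relative Location: same-site redirect
            continue
        target = _strip_www(target_host)
        if target == _strip_www(source_domain):  # e.g. non-www to www
            continue
        sources.add(source_domain)
        targets.add(target)
    return len(sources), len(targets)


# Sample records for illustration only.
records = [
    ("old-a.example", 301, "https://brand.example/"),
    ("old-b.example", 301, "https://www.brand.example/x"),
    ("c.example", 302, "https://other.example/"),
    ("d.example", 301, "/new"),
    ("e.example", 301, "https://www.e.example/"),
]
print(summarize_301(records))
```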

You may be interested in: How to check redirects during a website migration with JetOctopus.

Marketing tools

While analyzing robots.txt files, we found that many websites block crawling by various marketing bots. This is done for many reasons, such as reducing server load (these bots can crawl aggressively, with more requests than Googlebot) or hiding links from competitors.
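To check whether a given robots.txt blocks a particular bot, you can use the `urllib.robotparser` module from the Python standard library. In this sketch, `ExampleMarketingBot` is a made-up user agent and the robots.txt content is sample data.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt: block one (hypothetical) marketing bot entirely,
# and keep /admin/ closed for everyone else.
ROBOTS_TXT = """\
User-agent: ExampleMarketingBot
Disallow: /

User-agent: *
Disallow: /admin/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
print(parser.can_fetch("ExampleMarketingBot", "https://example.com/page"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/page"))            # True
```

Running the same check for a list of known bot user agents across many robots.txt files gives an estimate of how many websites hide themselves from each tool.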

However, blocking marketing bots with the robots.txt file is not the only way to disallow website crawling. There is even a theory that marketing bots ignore the robots.txt file. That's why many websites use user-agent blocking: if the user agent matches a marketing bot, the website returns a 4xx status code or lets the request time out.
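User-agent blocking usually comes down to a substring match on the User-Agent request header. A minimal sketch, assuming a hypothetical bot token and a 403 response; real setups often do this in the web server or CDN configuration instead.

```python
# Hypothetical token list; real deployments maintain their own.
BLOCKED_UA_TOKENS = ("examplemarketingbot",)


def status_for_user_agent(user_agent: str) -> int:
    """Return 403 for blocked bots and 200 for everyone else."""
    ua = user_agent.lower()
    if any(token in ua for token in BLOCKED_UA_TOKENS):
        return 403
    return 200


print(status_for_user_agent("Mozilla/5.0 (compatible; ExampleMarketingBot/1.0)"))
print(status_for_user_agent("Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))
```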

By the way, most marketing bots follow the rules in the robots.txt file. We know this from log analysis.

The total number of websites hidden from crawling by marketing tools is about 40 million. This means you don't see the backlink data from every fourth website on the Internet. An interesting insight: compared with our previous crawl, this number has doubled in 2022.

In addition to marketing bots, your site can be overloaded by fake bots. These bots emulate the user agents of Googlebot or other search engines. And such fake bots ignore robots.txt files. Therefore, we recommend analyzing their activity and blocking them in various ways.
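A common way to tell a real Googlebot from a fake one, described in Google's own documentation, is a reverse DNS lookup on the requesting IP followed by a forward lookup that must return the same IP. The sketch below is one possible implementation: the function name and the injectable lookup parameters are assumptions added for testability, and the forward lookup shown handles IPv4 only.

```python
import socket

# Hostname suffixes that genuine Googlebot reverse DNS names end with.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip: str, reverse_lookup=None, forward_lookup=None) -> bool:
    """Check an IP with a reverse DNS lookup plus a confirming forward lookup."""
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        host = reverse_lookup(ip).lower().rstrip(".")
        if not host.endswith(GOOGLE_SUFFIXES):
            return False
        # The hostname must resolve back to the same IP, otherwise the
        # reverse DNS record could simply be spoofed.
        return ip in forward_lookup(host)
    except OSError:  # covers socket.herror and socket.gaierror
        return False
```

In log analysis, requests that claim a Googlebot user agent but fail this check are candidates for blocking.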

More information: Fake bots: what it is and why you need to analyze it.

Conclusions

This is only a small part of the data. We are very interested to see how the Internet has changed since 2020. But basic signals like backlinks and redirects are still important.

Join the discussion on Serge Bezborodov’s Twitter if you want to receive other interesting data.