Simple Lifehack: How to Create a Site That Will Never Go Down

Recently we read a sad post on Facebook about a sudden hardware malfunction. No one was around to help, cunning competitors took advantage by using submit-URL tools, and as a result the website was knocked out of the top organic search positions. The conclusion: the website needs to be monitored more thoroughly and bugs fixed without delay. Today we want to present another way to address such problems.

It’s no secret that even the most famous, high-quality websites break down from time to time. Unfortunately, ordinary small-business websites can break down several times a year, causing big trouble for webmasters. SEO agencies and website owners constantly try new monitoring methods in order to find bugs as soon as possible. But what do you do if the admin is relaxing on a Caribbean island and the programmer doesn’t pick up the phone at 3 a.m.? Does this situation sound familiar?

What if I told you that you could turn the situation around, so that your web pages are up 99.99% of the year, just like the market leaders’ websites? You don’t believe me, do you? I will explain in simple words how to make this come true.

The key concepts:

First, a few words about how sites work. An average site, for example a WordPress site or an OpenCart online shop, usually consists of two or three parts:

- PHP app. This is the business logic of your site, which works with the database.

- Database. All data is stored here: items, categories, orders, payments…

- Cache (not always present): a temporary store that works faster than an ordinary database and holds frequently used data. For instance: the week's top sales, the latest comments, etc.

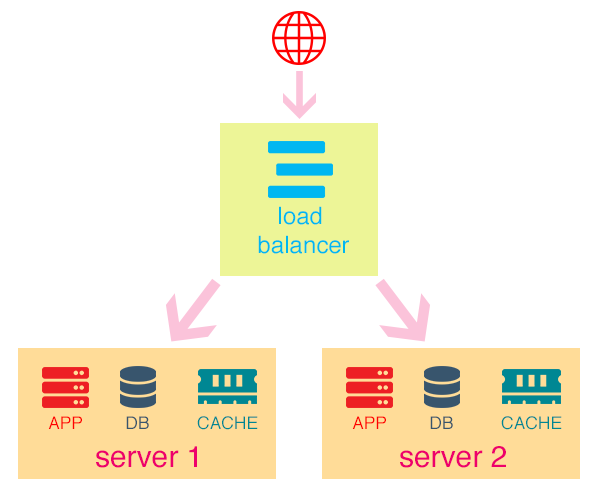

All these components can be hosted on one physical server or on different servers. Several such sets of components can also share one server, as on shared hosting.

The main reasons why sites usually go down (not counting cases where a programmer deployed a bad change to the production server):

- The server is down or unplugged. For instance, the hard disk suddenly failed, the cleaning lady accidentally yanked the cable, etc.

- A limited resource has run out (free disk space, RAM).

- Technical bugs in the app. A website can go down because of a small change in load (for example, you created a new sitemap.xml, submitted it to Google for crawling, and search bots swarmed your website) or because of a design mistake (for example, the programmer chose the wrong type for a database field, so your 32,768th order can't be added to the system).

The list of such reasons could be endless. Even a junior programmer can talk about such bugs for hours.
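The order number in the last example is not arbitrary. A minimal sketch, assuming the order ID was stored in a signed 16-bit field (the article does not name the column type, so this is an assumption; the helper name `fits_signed_16_bit` is made up for illustration). The largest value such a field can hold is 32767, so order 32768 is the first one that fails:

```python
import struct

SIGNED_16_BIT_MAX = 2**15 - 1  # 32767, the largest signed 16-bit integer


def fits_signed_16_bit(order_id: int) -> bool:
    """Return True if order_id can be stored in a signed 16-bit field."""
    try:
        struct.pack("<h", order_id)  # "h" is a signed 16-bit integer
        return True
    except struct.error:
        # Raised when the value is outside the range -32768..32767.
        return False


if __name__ == "__main__":
    for order_id in (32767, 32768):
        print(order_id, fits_signed_16_bit(order_id))
```

Order 32767 fits and order 32768 does not, which is why the site can work fine for months and then suddenly refuse new orders.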

The Key to success

To fully understand how our sites could work without any issues, let’s look at an industry where stability is of crucial importance. We’re talking about aviation. The aviation industry has come a long way, from low-powered crop dusters to huge modern airplanes. Statistics show that planes are the safest mode of transport ever created by humans. What is the secret of aviation stability?

First of all, every crucial system must be duplicated: several brake lines, two independent on-board computers, separate fuel feed systems, and two pilots. The same approach should be used for website development. The first thing to do is to put a copy of the website on another host, in another data center, or even on another continent.

In the old days, this simply looked like www1.site.com and www2.site.com, and you could land on one version of the website or the other. Times have changed, and now there is just www.site.com, which points to one server or the other.

What is a load balancer server?

The load balancer is a separate server that runs an app (Nginx, for example). This app distributes traffic according to a certain algorithm. If the main server is down, all traffic is switched to the second server, and vice versa. This way, the only single point of failure is the load balancer itself. In practice, a load balancer is a very reliable system that rarely goes down. But the load balancer can also be duplicated for your peace of mind.
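The switching rule described above can be sketched in a few lines. This is a simplified illustration, not how Nginx is implemented: the `Backend` class, the `pick_backend` function, and the server names are hypothetical, and the `healthy` flag stands in for whatever health check the balancer actually runs.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Backend:
    name: str      # hypothetical label, e.g. "main" or "second"
    healthy: bool  # result of the most recent health check


def pick_backend(backends: Sequence[Backend]) -> Optional[Backend]:
    """Return the first healthy backend in priority order, or None if all are down."""
    for backend in backends:
        if backend.healthy:
            return backend
    return None


if __name__ == "__main__":
    servers = [Backend("main", healthy=False), Backend("second", healthy=True)]
    chosen = pick_backend(servers)
    print(chosen.name if chosen else "no server available")
```

With the main server marked as down, traffic goes to the second one; if the second server fails instead, the main one keeps serving.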

Database

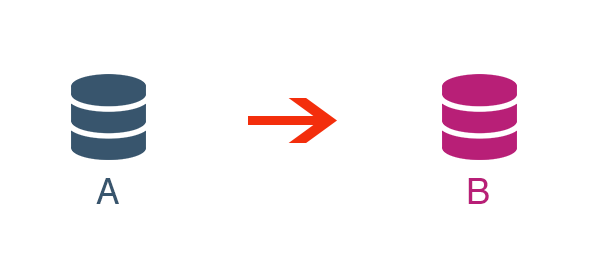

Now a new problem appears: we have two copies of the website on two servers. If you want to update content, you have to do it on both servers, wasting time and effort on monkey work. Fortunately, there is a way out: database replication.

Let us explain in simple words: there is the main database on server A and an additional database on server B. When a change is made on server A (a blog post, an item, an order, etc.), server B gets a copy of the new data almost immediately. As a result, the two databases stay in sync and consistent.
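The idea can be modeled with a toy example. This is only a sketch of the concept, assuming a simple change log that server B reads from; real database replication is configured in the database itself, and the `Primary` and `Replica` classes here are hypothetical stand-ins for servers A and B.

```python
from typing import Dict, List, Tuple


class Primary:
    """Server A: accepts writes and records every change in an ordered log."""

    def __init__(self) -> None:
        self.rows: Dict[str, str] = {}
        self.log: List[Tuple[str, str]] = []

    def write(self, key: str, value: str) -> None:
        self.rows[key] = value
        self.log.append((key, value))


class Replica:
    """Server B: replays the log entries it has not applied yet."""

    def __init__(self) -> None:
        self.rows: Dict[str, str] = {}
        self.position = 0  # number of log entries already applied

    def catch_up(self, primary: Primary) -> None:
        for key, value in primary.log[self.position:]:
            self.rows[key] = value
        self.position = len(primary.log)


if __name__ == "__main__":
    server_a, server_b = Primary(), Replica()
    server_a.write("post:1", "New blog post")
    server_a.write("order:1", "2 items")
    server_b.catch_up(server_a)
    print(server_b.rows == server_a.rows)
```

Between two `catch_up` calls server B is slightly behind server A, which is the "almost immediately" part of the description.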

We have a very old and complicated system

Old websites very often contain a variety of bugs, and it is not easy to copy them and duplicate them on other servers. In this case, try a failover solution.

- Make a copy of the website and remove everything non-essential. Now we have a basic version only, with the key functionality (for instance, without the ability to leave comments). The database isn’t synchronized automatically but manually, once a week or once a month.

- If you can’t use the previous option, create a static HTML copy of the website. The load balancer should be configured as follows: if the main server doesn’t send a response code within one minute, all your traffic is sent to the additional server. This approach gives the programmers valuable time to fix bugs on the main server.
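The timeout rule from the second option can be sketched as follows. This is an illustration of the logic only, not a load balancer configuration: `serve`, `fetch_main`, and `fetch_static` are hypothetical names, and the two fetch functions are passed in so the sketch does not depend on any particular HTTP library.

```python
from typing import Callable

TIMEOUT_SECONDS = 60.0  # "within one minute", as described in the text


def serve(
    path: str,
    fetch_main: Callable[[str, float], str],
    fetch_static: Callable[[str], str],
    timeout: float = TIMEOUT_SECONDS,
) -> str:
    """Ask the main server first; fall back to the static copy if it fails to answer."""
    try:
        return fetch_main(path, timeout)
    except (TimeoutError, ConnectionError):
        # The main server did not respond in time or refused the connection.
        return fetch_static(path)


if __name__ == "__main__":
    def broken_main(path: str, timeout: float) -> str:
        raise TimeoutError(f"no response for {path} within {timeout} s")

    def static_copy(path: str) -> str:
        return f"static HTML for {path}"

    print(serve("/catalog", broken_main, static_copy))
```

Visitors get the static page instead of an error while the main server is being repaired.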

The technical staff said they couldn’t build this system

In my experience, I’ve met webmasters with different levels of knowledge, but they could always understand at least the failover method. You just need to find a qualified specialist.

How much does it cost?

Maybe you think that implementing such a system is very expensive, but that’s not true. Almost any VPS with 1-2 GB of memory can be used as a load balancer. For instance, on one of our projects we run the load balancer on a 2 GB VPS from DigitalOcean for $10 a month. The cost of the additional server depends on the requirements of your website. Simple math works here. What is more expensive: an additional server or website downtime?
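The simple math can be written down. A rough sketch: the $10 monthly cost comes from the example above, while the revenue of $30 per hour is a made-up figure for illustration only, and both function names are hypothetical. The first function also shows how little downtime the 99.99% target allows.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def allowed_downtime_minutes_per_year(availability: float) -> float:
    """Downtime budget per year for a given availability, e.g. 0.9999 for 99.99%."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def break_even_downtime_minutes(server_cost_per_month: float,
                                revenue_per_hour: float) -> float:
    """Minutes of downtime per month that cost as much as the extra server."""
    if revenue_per_hour <= 0:
        raise ValueError("revenue_per_hour must be positive")
    return server_cost_per_month / (revenue_per_hour / 60.0)


if __name__ == "__main__":
    print(round(allowed_downtime_minutes_per_year(0.9999), 2))
    # $10 per month is from the article; $30 per hour is an assumed revenue.
    print(break_even_downtime_minutes(10.0, 30.0))
```

Under these assumed numbers, the extra server pays for itself as soon as it prevents about 20 minutes of downtime per month, and a 99.99% target leaves less than an hour of downtime for the whole year.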

To sum up

At first glance, increasing website reliability looks hard, but in fact almost every website can be made more reliable without Herculean efforts. There are a lot of guides on load balancer setup on the Internet, and it’s not a difficult task for a mid-level system administrator. I hope you will never have to complain about competitors who benefit from your website’s downtime and improve their positions in Google!
