6 ideas to improve your SEO with segmentation of crawl results, logs and GSC

Segmentation is a great way to drill down into your SEO. Whether your site is large or small, segmentation helps you understand the current state of different page types and estimate the potential of your website. In this article, we share some ideas for segmenting your website to get useful insights. We recommend using not only crawl results, but also logs and Google Search Console data when segmenting.

How segmentation works in JetOctopus

When you analyze your customers together with other marketers and analysts, you are also doing segmentation: you look at where your customers are located, which devices and browsers they use, and whether they are new, regular or occasional visitors. These are user segments, and they help you build a better growth strategy. The same can be done with URLs: URL segmentation helps you find the strengths and weaknesses of your SEO and build a more precise strategy.

Segmentation is a way to divide your website into smaller parts by grouping URLs. You can create a segment from any pages:

- create a segment from pages that contain /product/, /category/, /page/ in URL address to track the performance of these pages;

- create a segment from /fr/, /en/ pages to track errors on different language versions;

- you can also create a bucket of exact URLs to track your split testing progress (that’s right, you can upload up to 10,000 exact URLs to create a segment);

- add URLs from logs and from Google Search Console in separate segments, and so on.
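As an illustration of URL-based grouping, here is a minimal Python sketch. The rule names and the `assign_segments` helper are hypothetical and are not part of JetOctopus; the sketch only shows the idea of matching a URL path against simple “contains” rules.

```python
from urllib.parse import urlparse

# Hypothetical rules: segment name -> fragment that must appear in the URL path.
SEGMENT_RULES = {
    "products": "/product/",
    "categories": "/category/",
    "french": "/fr/",
}


def assign_segments(url, rules=SEGMENT_RULES):
    """Return the sorted names of all segments whose fragment occurs in the URL path."""
    path = urlparse(url).path
    return sorted(name for name, fragment in rules.items() if fragment in path)


urls = [
    "https://example.com/fr/product/red-shoes",
    "https://example.com/category/shoes/",
    "https://example.com/about",
]

# Map every URL to the list of segments it belongs to.
segments = {url: assign_segments(url) for url in urls}
for url, names in segments.items():
    print(url, names)
```

Note that one URL can belong to several segments at once, which is why the helper returns a list rather than a single name.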

Using JetOctopus, you can create as many segments with as many rules as you need. For convenience, all segments can be divided into groups with understandable names.

More information on how segments work: How to use segments.

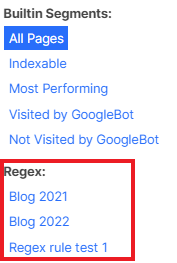

Idea 1. Use built-in segments

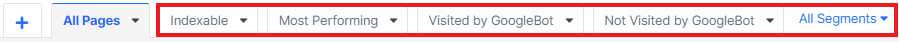

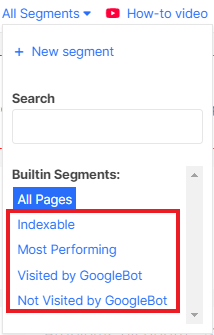

Our first recommendation is to start working with built-in segments. When crawling, analyzing logs or data from Google Search Console, JetOctopus automatically creates several segments from your URLs. We have selected the most necessary metrics for SEO specialists, and we suggest analyzing these segments first.

So, JetOctopus automatically creates such page segments:

- Indexable URLs;

- Most Performing pages;

- Visited by GoogleBot;

- Not Visited by GoogleBot.

You can select these segments by clicking on the button “All segments” – “Builtin Segments”.

Please note that the last three built-in segments are available only if you have integrated Google Search Console and logs. The indexable URLs segment is available as soon as you have at least one completed crawl.

Of course, SEOs are primarily interested in how effective indexable pages are. After all, if the page is indexable, we probably want it to bring traffic. With just 1 click, you can easily check how your indexable pages are performing, what technical issues there are on these pages, and what kind of internal linking these pages have.

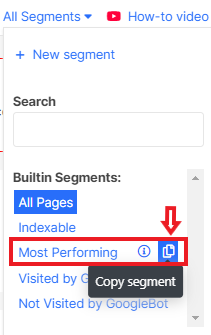

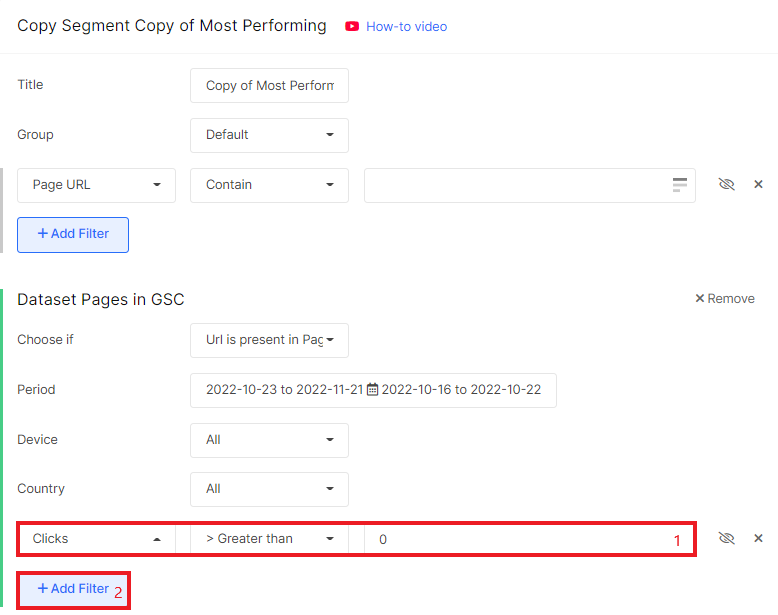

The “Most Performing” segment contains the pages that are the lifeblood of your website. These are the URLs that were found in the crawl results, visited by search engines and displayed in Google SERP. By the way, you can add another filter in the segment to select those pages that bring you traffic. To do this, copy the “Most Performing” segment.

Next, configure the appropriate filter in the “Pages in GSC” dataset and set the required number of clicks.

The “Visited by GoogleBot” segment allows you to single out the pages that were visited by Google search robots. This segment displays URLs visited by both the smartphone and the desktop GoogleBot. What insights can we find by analyzing this segment of URLs?

First, we can highlight pages with technical issues. For example, if search engines visit pages whose canonicals point to non-200 URLs, these URLs are very likely to be dropped from the index.

Secondly, we can analyze the technical metrics of this segment: what is the load time, what is the page speed and performance, etc.

Thirdly, URLs from this segment are potentially traffic-generating. These pages are probably well interlinked, because search engines were able to find them in the code and crawl them. But if this segment contains URLs with query parameters, non-indexable pages or pagination, it is worth reviewing the internal optimization and focusing on crawl budget optimization (we recommend reading the Logs Analysis For SEO Boost article). After all, it is better for search engines to crawl indexable pages and rank them. If search engines spend their visits on URLs that are not valuable for users, there will be less organic traffic.
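To make the crawl budget check more concrete, here is a minimal Python sketch that sorts bot-visited URLs into rough buckets. The pagination pattern (`/page/N` in the path or a `page` query parameter) and the sample URLs are assumptions for illustration; your website may use a different URL structure. Whether a page is indexable cannot be derived from the URL alone, so that check is not covered here.

```python
import re
from collections import Counter
from urllib.parse import parse_qs, urlparse

# Assumed pagination convention: a path that ends with /page/<number>.
PAGINATION_PATH = re.compile(r"/page/[0-9]+/?$")


def crawl_budget_bucket(url):
    """Classify a URL as 'pagination', 'query_parameters' or 'clean'."""
    parsed = urlparse(url)
    if PAGINATION_PATH.search(parsed.path) or "page" in parse_qs(parsed.query):
        return "pagination"
    if parsed.query:
        return "query_parameters"
    return "clean"


# Hypothetical sample of URLs found in the logs.
visited_by_bot = [
    "https://example.com/product/red-shoes",
    "https://example.com/category/shoes/page/3",
    "https://example.com/category/shoes?page=2",
    "https://example.com/category/shoes?sort=price",
]

bucket_counts = Counter(crawl_budget_bucket(url) for url in visited_by_bot)
print(bucket_counts)
```

A large share of the “pagination” and “query_parameters” buckets among bot-visited URLs is a hint that the crawl budget deserves a closer look.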

The situation is similar with “Not Visited by GoogleBot” pages. We recommend that you analyze whether there are pages in this segment that you would like to include in the SERP. If search engines do not visit a page for a long time, this page will not be ranked in Google SERP. You can also set the desired period to check whether the crawler has visited new pages or URLs with updated content.

As you can see, using built-in segments, you can get a lot of insights about the health of your website.

Idea 2. Set up alerts for each segment

Using JetOctopus, you can set up alerts that notify you of important changes on your website, such as a decrease in search robot activity, a change in indexing rules, an increase in page load time, etc.

More information about alerts: Guide to creating alerts: tips that will help not miss any error

Webinar: ALERTS in JetOctopus or now you can sleep well (video)

But it is not always advisable to monitor all the pages of the website daily. Therefore, we recommend separating the most important pages of your website into separate segments and setting up special alerts for each segment.

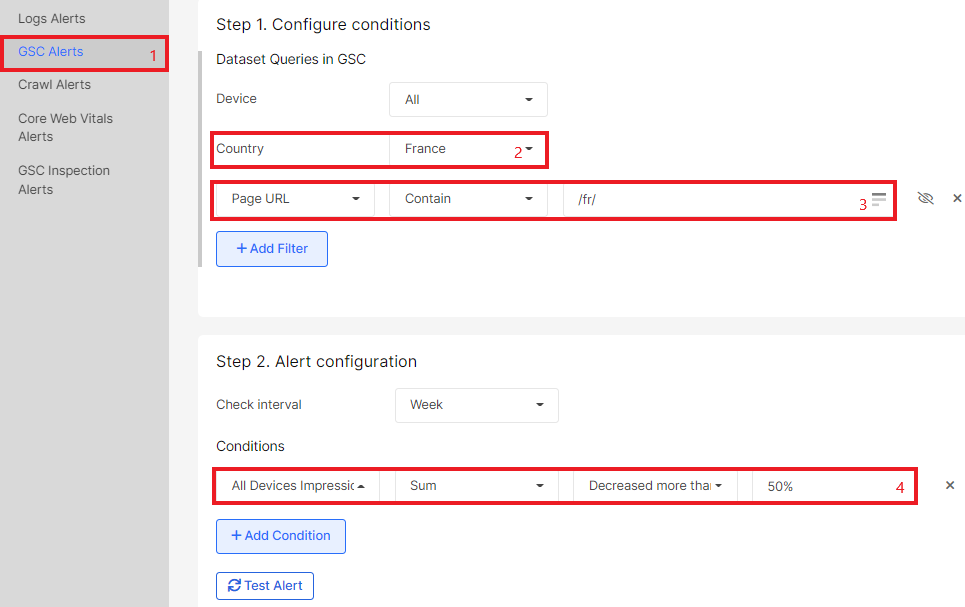

For example, allocate indexable pages from different localized subfolders into separate segments. One segment will contain URLs with /fr/, another with /en/, and so on. Then set up an alert to notify you if the number of clicks or impressions for URLs with /fr/ drops by 50% for users from France. This can be done using Google Search Console alerts.
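The logic behind such a threshold is simple arithmetic. The sketch below is only an illustration of the 50% rule; the function names and the click numbers are hypothetical, and it does not describe how JetOctopus alerts are implemented.

```python
def click_drop_percent(previous_clicks, current_clicks):
    """Return the drop in clicks as a percentage of the previous period."""
    if previous_clicks == 0:
        return 0.0  # nothing to compare against
    return (previous_clicks - current_clicks) / previous_clicks * 100


def should_alert(previous_clicks, current_clicks, threshold_percent=50.0):
    """True if clicks dropped by at least threshold_percent."""
    return click_drop_percent(previous_clicks, current_clicks) >= threshold_percent


# Hypothetical weekly clicks for the /fr/ segment from users in France.
print(should_alert(previous_clicks=1200, current_clicks=540))  # a 55% drop
print(should_alert(previous_clicks=1200, current_clicks=900))  # a 25% drop
```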

On large projects, different types of pages may be handled by different development teams, and their releases may not coincide. For example, one team might release changes to the home page daily, while another team is responsible for categories and releases once a week. Therefore, separate these types of pages into segments and set up alerts for the most critical SEO metrics and HTML elements in each segment. These can be indexing rules, canonicals, page load time and much more.

Idea 3. Use regex and configure exclusion rules

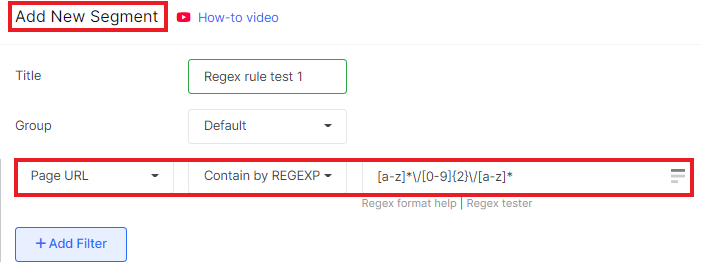

Segments are a very flexible tool. You can customize a segment using regular expressions (regex). Regular expressions are special rules for matching fragments of URL text. For example, using the regex [0-9]+, you can select pages that contain one or more digits in the URL, such as https://example.com/1, https://example.com/page-345 and https://example.com/category/123. The + quantifier means “one or more”; with * the pattern would also match an empty string and therefore every URL. And using the regex [a-z]+, you can segment all URLs that contain at least one lowercase Latin letter.

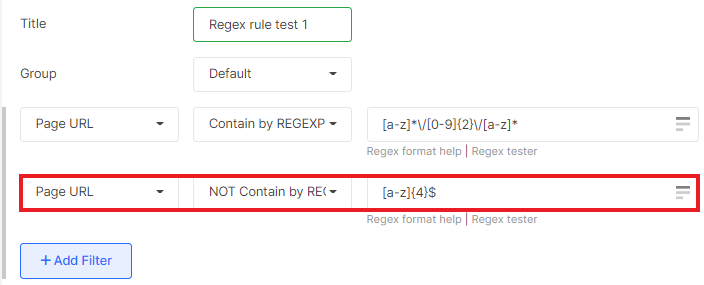

Remember that order matters in regular expressions. For example, the regex [a-z]+\/[0-9]{2}\/[a-z]+ selects only those URLs that contain the following groups of characters in sequence: one or more Latin letters, a slash, exactly two digits, a slash, one or more Latin letters. The following URLs match this rule: https://example.com/category/12/filter-123 and https://example.com/product/22/review-by-john. But the URL https://example.com/category/12/12-filter does not match (the sequence is broken: a digit, not a letter, follows the second slash). URLs like https://example.com/category/1235/filter-123 will also not be in the segment (there are more than two digits between the slashes). Note that + is needed here: with * the letter groups could be empty, and https://example.com/category/12/12-filter would match.
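You can check this behaviour locally before creating the segment. Below is a minimal Python sketch, assuming that the regex filter behaves like an unanchored “contains” search (`re.search` in Python); this is an assumption about the filter, not documented behaviour. The sketch uses the + quantifier so that each letter group must contain at least one letter, and compares it with a looser * variant.

```python
import re

# Letters, slash, exactly two digits, slash, letters (each letter group non-empty).
STRICT = re.compile(r"[a-z]+/[0-9]{2}/[a-z]+")
# The same pattern with *, which allows the letter groups to be empty.
LOOSE = re.compile(r"[a-z]*/[0-9]{2}/[a-z]*")

urls = [
    "https://example.com/category/12/filter-123",
    "https://example.com/product/22/review-by-john",
    "https://example.com/category/12/12-filter",
    "https://example.com/category/1235/filter-123",
]

# True if the pattern is found anywhere in the URL.
strict_matches = {url: bool(STRICT.search(url)) for url in urls}
loose_matches = {url: bool(LOOSE.search(url)) for url in urls}

for url in urls:
    print(url, strict_matches[url], loose_matches[url])
```

With the strict pattern only the first two URLs match. The loose pattern also matches https://example.com/category/12/12-filter, because an empty letter group is allowed after the second slash.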

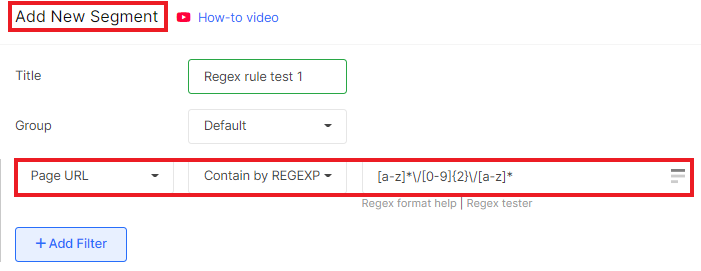

To use regex, create a segment, select the filter “Page URL” – “Contain by REGEXP” – then enter the regex.

Be sure to check the regex rule. You can use the site https://regex101.com/ to test it. Also, check if the required URLs are included in the segment. To do this, go to the “Pages” data table and select the desired segment. Check that the page list contains all the pages you need.

Please note that one URL can match the rules of several segments at the same time. To avoid this, configure exclusion rules. You can use a variety of exclusion rules, including regex. For example, you can exclude URLs that contain /page/ or /comment/. To exclude pages from a segment with a regex, select the filter “Page URL” – “NOT Contain by REGEXP” – then enter the regex.

There can be many rules for creating segments. You can use two or more regex rules in your segments, and you can use many exceptions.
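Combining inclusion and exclusion rules can be sketched as follows. The `in_segment` helper is hypothetical, and the way the rules are combined (a URL must match every inclusion pattern and no exclusion pattern) is an assumption made for this example.

```python
import re


def in_segment(url, include_patterns, exclude_patterns=()):
    """True if the URL matches all inclusion regexes and none of the exclusion regexes."""
    included = all(re.search(pattern, url) for pattern in include_patterns)
    excluded = any(re.search(pattern, url) for pattern in exclude_patterns)
    return included and not excluded


# Hypothetical segment: blog articles without pagination and comment pages.
include = [r"/blog/"]
exclude = [r"/page/", r"/comment/"]

urls = [
    "https://example.com/blog/seo-tips",
    "https://example.com/blog/page/2",
    "https://example.com/blog/seo-tips/comment/15",
]

blog_segment = [url for url in urls if in_segment(url, include, exclude)]
print(blog_segment)
```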

Idea 4. Create separate segments for logs, crawl and Search Console

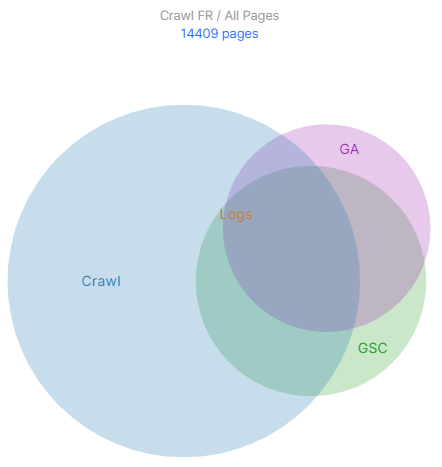

Go to the “SEO efficiency” chart. Are all crawled pages visited by search engines and ranked in SERP? For many websites, the typical situation is that only a small share of URLs passes through the whole funnel that starts with crawling.

The URLs visited by GoogleBot can be very different from the pages found during the crawl. Therefore, we recommend creating several separate URL segments for logs and for crawl data. For example, if search bots keep requesting old URLs that return 301 redirects after a migration, create a segment of those URLs to track how bot activity on the old URLs changes over time. These pages may not appear in the crawl results, but search bots can visit old URLs very often.
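The difference between the two data sources can be illustrated with plain set operations. This is a minimal Python sketch with hypothetical URL lists; in practice the lists would come from your crawl export and your log data.

```python
# Hypothetical URLs found by the crawler.
crawled_urls = {
    "https://example.com/",
    "https://example.com/category/shoes",
    "https://example.com/product/red-shoes",
}

# Hypothetical URLs requested by search bots according to the logs.
log_urls = {
    "https://example.com/",
    "https://example.com/product/red-shoes",
    "https://example.com/old-catalog/shoes",  # old URL that now redirects
}

only_in_logs = log_urls - crawled_urls   # visited by bots, not found in the crawl
only_in_crawl = crawled_urls - log_urls  # found in the crawl, not visited by bots
in_both = crawled_urls & log_urls        # found in the crawl and visited by bots

print(sorted(only_in_logs))
print(sorted(only_in_crawl))
print(sorted(in_both))
```

URLs that appear only in the logs are natural candidates for a separate log segment, for example old URLs left over after a migration.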

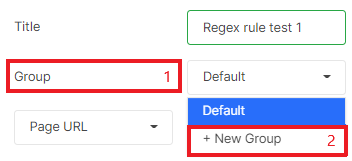

For convenience, we recommend creating different groups of segments. Go to the segment creation and enter the name of the group in the “Group” field or select an existing one.

Groups of segments will be conveniently displayed in the upper menu, so you can easily select the desired segment for analysis in different sections.

The segments you create will be available in each section: Logs, Crawl and GSC. But our main idea is to highlight specific segments for each section.

Idea 5. Use segments to create beautiful charts

Using segments, you can analyze the performance of URLs in that segment using all the charts and visualizations in JetOctopus.

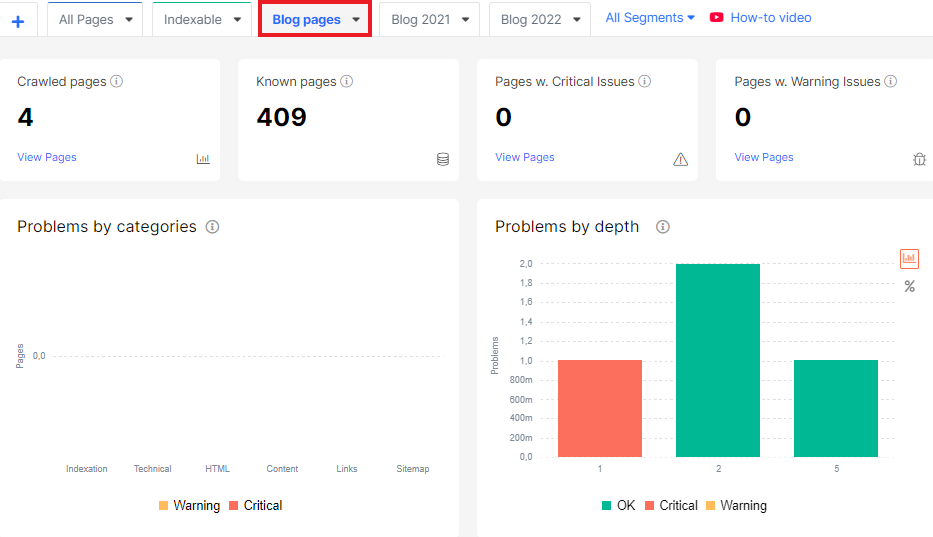

For example, create a segment with all blog articles – URLs that contain /blog/. Next, go to any report with charts and select the desired segment at the top. All charts will now display data only for the URL segment you selected.

It’s a great way to compare the performance of different types of pages and to prioritize tasks based on data. All reports in JetOctopus can be viewed for each segment.

Idea 6. Get information about search results for different devices

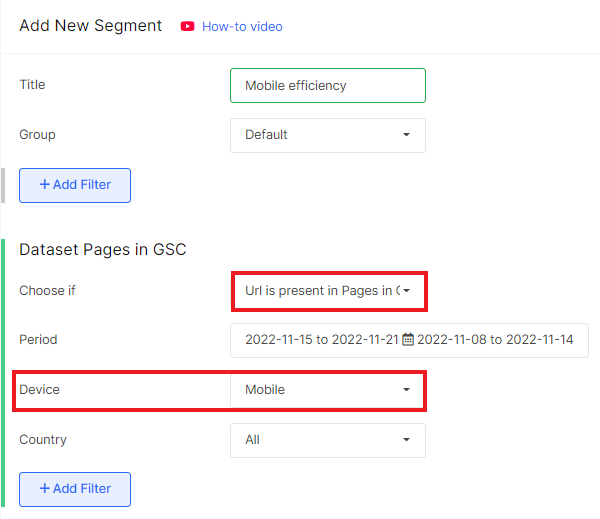

The number of clicks and impressions, as well as the average position of pages, may differ between devices. In recent years, people have increasingly used mobile devices for shopping and for viewing information on the Internet. Therefore, it is important to monitor how effective your website is on different types of devices. We recommend creating two URL segments, for example one for mobile and one for desktop.

To do this, you need to connect Google Search Console (GSC integration video). Next, click the “Create segment” button, create rules for the required URLs and add the “Present in Google Search Console” filter. The next step is to select the desired device.

With the help of such segmentation, you will be able to find a correlation between the performance of the website for different types of devices and the technical condition of the website. For example, if the CTR for smartphones is lower, check the Core Web Vitals metrics for mobile devices. Or compare the load time. This is a great option for finding insights.
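The CTR comparison itself is simple: CTR is the number of clicks divided by the number of impressions. Here is a minimal Python sketch that aggregates CTR per device; the rows and field names are hypothetical and only imitate the kind of data exported from Google Search Console.

```python
from collections import defaultdict

# Hypothetical search performance rows for one URL segment.
rows = [
    {"device": "MOBILE", "clicks": 120, "impressions": 6000},
    {"device": "DESKTOP", "clicks": 90, "impressions": 2000},
    {"device": "MOBILE", "clicks": 30, "impressions": 1500},
]


def ctr_by_device(rows):
    """Return {device: total clicks / total impressions} for the given rows."""
    totals = defaultdict(lambda: [0, 0])  # device -> [clicks, impressions]
    for row in rows:
        totals[row["device"]][0] += row["clicks"]
        totals[row["device"]][1] += row["impressions"]
    return {
        device: (clicks / impressions if impressions else 0.0)
        for device, (clicks, impressions) in totals.items()
    }


ctr = ctr_by_device(rows)
for device, value in ctr.items():
    print(f"{device}: {value:.2%}")
```

In this made-up sample the mobile CTR is lower than the desktop CTR, which would be a reason to look at mobile Core Web Vitals and load time first.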

Bonus ideas

As we said, you can create as many segments as you want. Here are some more ideas for what segments you can create to search for insights and for deep data analysis:

- create URL segments that are effective in different countries;

- create URL segments that are crawled by different types of search robots and analyze whether this affects the performance of the pages;

- separate the URLs that contain the desired domain;

- URLs that contain pagination;

- URLs that contain GET parameters (even if these pages are not in the crawl results, search engines can actively visit them);

- highlight different types of pages (product, home page as an exact URL, categories, filtering, etc.);

- filter pages by date (for example, articles that were published in 2022, 2021, etc., if the URL has a year);

- highlight pages by the name of something (for example, highlight pages that contain “iphone”, “alabama” or “kyiv” in the URL; this allows you to see the effectiveness of such URLs);

- create a segment of pages that rank for certain keywords;

- highlight pages that are effective in certain countries, etc.
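Some of these ideas, such as grouping by year or by a name in the URL, come down to simple pattern matching. Here is a minimal Python sketch, assuming that the year appears as a separate path folder like /2022/; the URL structure, the helper names and the sample URLs are hypothetical.

```python
import re

# Assumed convention: the publication year is its own folder, e.g. /blog/2022/title.
YEAR_IN_PATH = re.compile(r"/(20[0-9]{2})/")


def publication_year(url):
    """Return the year found in the URL path as an int, or None if there is none."""
    match = YEAR_IN_PATH.search(url)
    return int(match.group(1)) if match else None


def mentions(url, keyword):
    """True if the keyword occurs in the URL, ignoring letter case."""
    return keyword.lower() in url.lower()


urls = [
    "https://example.com/blog/2022/seo-tips",
    "https://example.com/blog/2021/iphone-review",
    "https://example.com/blog/seo-tips",
]

articles_2022 = [url for url in urls if publication_year(url) == 2022]
iphone_pages = [url for url in urls if mentions(url, "iphone")]
print(articles_2022)
print(iphone_pages)
```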

Segmentation allows you to get detailed statistics for different pages and highlight what works for one type of page, but is less effective for another type of URLs. Using segmentation, you can discover very unexpected reasons why traffic is falling or, conversely, why a certain type of page is growing.

We recommend reading additional articles about segmentation:

Segmentation. How to analyze website effectively

Segmentation. An exhaustive webinar about data merge (video)

Segmentation SEO For eCommerce Website: How to Skyrocket Your Rankings